Historical Series: Bionanotechnology

Bionanotechnology and the Computational Microscope

By Lisa Pollack

December 2013

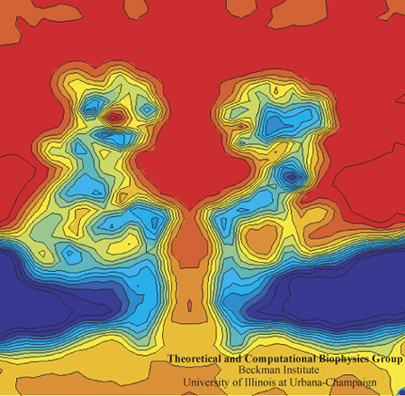

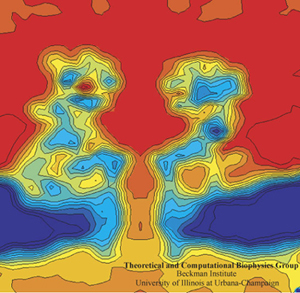

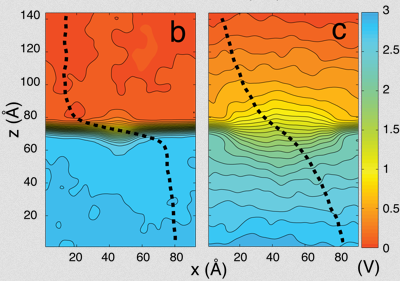

The computational microscope provides a rare view of the electric field inside a nanopore of alpha-hemolysin.

Imagine how the prospectors who descended on California in 1849 would have fared had they possessed a functionalized hand that could simply reach into the Sacramento River and collect its gold. With their pans and sluice boxes the miners still missed the tiniest gold particles–that is, they had no way to capture the nano-sized flecks of the precious metal that graced the many rivers of the Sacramento River Valley. But what if a synthetic protein were crafted that specifically bound to gold and could aid in trapping gold nanoparticles? When Klaus Schulten heard about just such a mechanism, from the very scientist who had tediously labored to find a peptide sequence that affixed to gold, Schulten was intrigued. This eccentric scientist told Schulten he had indeed found a way to isolate gold from the Sacramento River, but wanted Schulten's help in visualizing and explaining why the protein segment itself bound so well to gold. The situation warranted a tool to literally envisage the nano-world of the gold-binding peptide, and luckily Schulten had such a tool in his arsenal: the computational microscope. When Schulten agreed to illuminate the atomic details of the gold-protein system in 2001, he did not realize that this would also launch his entry into the field of bionanotechnology. But he soon discovered that the computational microscope was virtually ordained for bionano-applications.

Both the computational microscope and bionanotechnology are relative newcomers on the timeline of the history of science. Schulten coined the term “computational microscope” around 2005 to describe the imaging technique that can offer what no other traditional microscope can in terms of viewing nano-systems; furthermore, it is composed of unusual constituent materials. Scientific information from chemistry and physics, clever algorithms, powerful computers, and human imagination are but some of the elements comprising this novel microscope. Ideal for capturing behavior of living objects like proteins or ribosomes, the computational microscope, it turns out, can also illuminate what happens when you bring a biomolecule into contact with an inanimate nanomaterial, such as the protein on gold mentioned above. Bionanotechnology is the marriage between two fields that have usually been studied separately: biotechnology and nanotechnology. When experimentalists started to bring “wet” biological materials into contact with traditionally “dry” nanodevices, the combined systems of “wet” plus “dry” were so small that light microscopes did not have the resolving power to see the resulting interactions. What's more, electron microscopy required freezing and thus could not capture progressing behaviors over time or image under natural (i.e. wet) conditions. Enter the computational microscope and Klaus Schulten's research team, the Theoretical and Computational Biophysics group, located in the Beckman Institute for Advanced Science and Technology at the University of Illinois.

“The Beckman Institute is the United Nations of Science,” says Schulten about the place he has called home for twenty-six years. “All of the different sciences count equally; it's not that one of them is better than the other.” The premise of the institute at its very conception was “multidisciplinary.” And Schulten is clear to point out he chose to go to Beckman because he needed to combine science with computer engineering principles to accomplish his goal of simulating biological systems on a grand scale. On top of that, the science he studied was a combination of biology, physics, and chemistry. His move to Beckman, with its accompanying turn to the engineers, however, was often remarked on with derision by colleagues. Yet Schulten still forged onward.

While Schulten embodies a typical scientist working at Beckman, as he entered the field of bionanotechnology at the start of the new millennium, he was well poised to illuminate such technology since the work often required teaming up with engineers or scientists from different fields to improve the sensors they studied. And while many scientists may shun applied science or technology, considering it not pure enough research, Schulten sees science and technology as partners. So it was natural for him to work on projects involving technology, and develop multidisciplinary collaborations, especially with experimentalists. He has been doing this to some degree all his professional life. And although the theoretical side of bionanotechnology–that is, simulating the creative systems the experimentalists imagine–is just starting to become recognized in this burgeoning field, Schulten says the experimentalists are thrilled to work with him and need little convincing of his potential contributions.

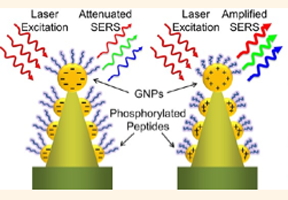

While Schulten entered the simulation side of bionanotechnology early, at that point in his career he had been honing the computer simulation of biological processes for almost two decades previously, and had a well-working technology in his group at Beckman. “Some of the questions that were relevant in bionanoengineering were actually pretty straightforward questions where the computer was already pretty good,” remarks Schulten. “And so basically I saw the computer could contribute to this field.” And what the computer offered was an unraveling of the measurement process going on with all the new and unconventional devices that Schulten's collaborators were fabricating. In the laboratory the scientists would measure things, some never before undertaken by anyone, and the computer could help answer questions such as: What does the measurement mean? Where does it come from? And how should I interpret it? For example, why does it take longer for one end of DNA to go through a channel compared to the other end? Why is methylated DNA more mechanically stable? Why is my kinase sensor not working properly? The computational microscope helped provide answers to all of these questions, as well as many more, and in some cases aided optimization of the measurement process.

The technologies the computational microscope is demystifying in this new field of bionanoengineering touch on some of the hottest topics in biology: simplified and inexpensive DNA sequencing, proteins (the kinases) that often go awry in cancer and disease, and epigenetics. Nanodevices have been around for a long time and many forms of them are even found in common household products. Now nanotechnology is being combined with biological systems to make very good devices. Part of that is because the nanoscale is the smallest scale where one can still measure chemistry. As one nanometer is about the width of a typical protein, sensors can go almost to the size of a protein or a molecule and still take instructive measurements. With such small samples, the bionano-device is tailored for single-molecule sensitivity, which is often desired and is what makes this such a promising technology. For example, imagine the headaches saved at every level if a biopsy of cancer cells required only a tiny amount of tissue, because the sensor for it worked at single-molecule specificity. And this tiny expanse of nanodevice plus biomolecule is the perfect size for Schulten's computational microscope. While nanotechnology is now considered “sexy,” as he once put it, Schulten is clear to point out that he has been simulating nanoscale systems for forty years, for that is the scale at which processes happen in the cell. So a device with dimensions of 10 nm × 10 nm × 10 nm is an ideal fit for the computational microscope.

This article will examine many fascinating nanodevices related to biotechnology, and specifically the role of the computational microscope in elucidating the inner workings of these bionano-applications. A theme running throughout is the multidisciplinary aspect of this work, for Schulten has teamed up with researchers from many disparate fields to work on the technologies revealed here. Indeed, as the Beckman mindset stresses work across many disciplines, this story embodies what happens when creative minds from all sorts of subfields come together to tackle a problem. Although the group at Beckman has worked on myriad projects in bionanotechnology since 2001, Schulten revealed he proceeded with no preconceived master plan. Most of his collaborations have happened by chance; topics appeared for consideration and he took them on. While the word “Technology” is part of the title of the Beckman Institute, Klaus Schulten is fully aware of the historical place of technology in science and how it shaped some of the scientific puzzles he had to solve when he entered this field of bionanotechnology. “Yes, you have to be intelligent and work hard,” he says about moving forward in research. “And you also need good opportunities. And opportunities now come very often through technology.” Schulten believes the many new systems and approaches of emerging technologies challenge the scientist with dilemmas to solve. Indeed, combining nanotechnology with biology in simulations has enhanced the range of the computational microscope, for it has uncovered a riveting array of new vistas, many of which we will visit in the coming pages.

We Don't Even Ignore You

“When I went to Beckman people said: Oh, Klaus, where are you going? You are going to the engineers. This will be your intellectual downfall. Don't do it!” The people, in this instance, were other scientists (read: not engineers) who considered their work pure science, and certainly did not value collaborations with engineers or work focused on technology. At the time Schulten made his decision to become part of the Beckman Institute, around 1987, he was a theoretical physicist who studied biology. To take his research to the next level, he realized that he needed computer technology and input from computer engineers. The ridicule from colleagues about embracing computer technology was not new to Schulten, and did not dissuade him from joining Beckman; however, his path to the University of Illinois (home of Beckman) was anything but straightforward.

The homemade supercomputer Klaus Schulten built in 1988.

In the 1980s Klaus Schulten had a productive career as a theoretical physicist at the Technical University of Munich, where he did some of the most highly cited work of his career. But for him personally, the atmosphere was a bit odd. He started to use computers more and more for his work in theoretical biology, and he paid a price with his colleagues. “Somehow today people accept computing more and more. But in those days,” Schulten says of the 1980s, “people thought literally that anybody who computes was a primitive person.” Any time Schulten used a computing word with his colleagues in the course of a normal conversation, they made jokes and even sexual innuendoes about it, and Schulten felt like a total idiot. The hostile undercurrents, however, didn't stop Schulten from undertaking a perilous mission: building his own supercomputer on a meager budget.

The plan to build a homemade supercomputer stemmed from exciting work just down the road from Munich, undertaken by friends of Schulten's at the Max Planck Institute for Biochemistry. By 1987 these friends, Hartmut Michel and Johann Deisenhofer, had crystallized and then determined a high-resolution structure of a membrane protein, a task once purported to be impossible. For this feat, they were awarded a Nobel Prize in 1988. Schulten had a front row seat to all the action, and when the structure was determined it was clear that exciting new calculations on a membrane protein could be run for the very first time. And Schulten wanted to be the first to do them. But a big roadblock stood in his way. For this type of calculation, Schulten would need to commandeer a supercomputer at some center, devoted exclusively for a year to his calculation. No center would be willing to accommodate this request.

Right around this time of the newly unveiled structure of the membrane protein, at the university in Munich, two young students in their early twenties assured Schulten he could indeed have his own supercomputer–they would build it themselves with his grant money. While this story is told in full detail elsewhere (see the history of NAMD), suffice it to say that Schulten took them up on their offer and, risking everything, did finally procure a supercomputer all to himself.

With the promise of such exciting and original calculations to be run, and the unconventional, homemade parallel supercomputer under construction, none of Schulten's colleagues in the Munich physics department paid any attention to him. Many had no interest in hearing about his plans for his computer, and most were just plain unaware. To summarize his treatment in Munich, Schulten here likes to point to a Bavarian saying: “I don't even ignore you.” Ignoring means turning a head to look the other way, “but when you don't even ignore it, it's totally not there,” Schulten explains. “You don't make any attempt even to look the other way because you pretend it's just air. It's just nothing.” Despite the indifference of his colleagues, Schulten marched forward with his plans to start using the computer more as a tool in his research, did not think about possibly finding a better environment to do his work, and even turned down a job offer that was unsolicited. But Schulten's rejection of this job offer was not the end of the story.

Intellectual Downfall in a Big Black Hole

Klaus Schulten had a funny feeling about the way the other physicists were acting after he gave his guest lecture in their department. It was 1985 and Schulten was temporarily living in the United States, on sabbatical from Munich. Since the University of Illinois at Urbana-Champaign was one of the few physics departments in the world at that time actually active in biophysics, Schulten agreed to give this invited talk in Urbana. He thus found himself in a small Midwestern college town, surrounded by flat farmland in all directions, which was a stark contrast to the skyline of New York City where he was spending his sabbatical. In between his lecture and the requisite evening dinner with the Illinois physics faculty, Schulten managed to sneak in a call to his wife. “I said to her: They act so strange. I hope they don't offer me a position, because I don't want to go to this god-forlorn place!”

The big black hole Klaus Schulten observed at the Beckman Institute construction site. Ted Brown, Beckman's first director, is in the foreground. Photo courtesy of the Beckman Institute.

Schulten's instincts were correct, however. Some of the faculty had an immediate meeting with their department committee and were ready by dinner to make an offer. Schulten respectfully declined. But the physicists in Urbana were undaunted and suggested Schulten and his wife, also a scientist, spend a term visiting at the university and getting to know the culture of the place, how they did science, before making a final decision. So Schulten moved his family to the Midwest from Munich in 1987 to give the University of Illinois a closer inspection.

Hours from a major city, surrounded by a “corn desert” as it's called in local vernacular, unrelentingly flat, and near no large water bodies to speak of, Schulten was himself surprised to discover that he very much enjoyed his trial period at the University of Illinois. He admits that many Europeans, including himself, considered the Midwest boring. But it wasn't the landscape that swayed this former urban resident. However, the landscape does contain some of the richest farmland on earth, and Schulten found some riches of his own. In fact, many factors coalesced to change his mind, the most important of which was probably a hole in that rich dirt.

Arriving in Illinois in the spring of 1987, Schulten found himself immersed in an exciting new development taking place at the university: the genesis of the new supercomputer center. In 1985, the National Science Foundation (NSF) announced it would devote $200 million in grants to create four centers in academia to bring supercomputer power to civilian researchers. Up to that time, powerful computers were mostly in the hands of U.S. defense department workers, and some industries, such as aircraft and automobile manufacturers or oil companies. But the four centers created in 1985 were specifically for researchers not in the aforementioned fields; Schulten was the kind of target audience the NSF hoped to reach with its new initiative. In Urbana, one of the original four places chosen by the NSF, the National Center for Supercomputing Applications (NCSA) opened for business in January 1986. Schulten, there in spring 1987, says it was a very fresh and exciting time, with so many new hires on staff with impressive backgrounds. They gave him a wonderful place to work, and he had access to fantastic computing facilities, particularly graphics equipment he made liberal use of. “It was definitely one of the JEWELS in my eyes of this place,” reiterates Schulten about the NCSA at the University of Illinois, “that they had this very concerted computational effort that I had been missing in Munich.”

While intrigued by how the university combined computing and science, Schulten also was drawn to the reputation of the physics department. His wife also felt comfortable in Urbana, with many friends in the chemistry department. However, Schulten points to one defining moment that really cemented his decision to move to the Midwest. His colleagues in Urbana told him that if he accepted their job offer, they could provide him with a laboratory in the new institute. When Schulten asked where it was on campus, they told him it was just being built and they would show him the construction site. What Schulten saw was just a hole in the ground, in the rich black glacial moraine of the Illinois soil. “It was really the largest black hole I had ever seen in my life, and it was so big that I realized, this is really a major institute,” explains Schulten. “So basically my decision to come here was because of the black hole that was supposed to become the foundation of the Beckman Institute.”

The Beckman Way

The roots of the Beckman Institute go all the way back to a small town in Illinois just over an hour's drive north of Urbana. There in the city of Cullom, Arnold Beckman was born, and after serving in World War I, got a BS degree in chemical engineering and a year later an MS in physical chemistry in 1922 from the University of Illinois at Urbana-Champaign. Arnold would go on to get a PhD from the California Institute of Technology, where he worked for a while until he founded his own company, Beckman Instruments. Arnold Beckman was an inventor, and two of his most famous inventions were the pH meter and the DU spectrophotometer. While spending most of his time in California running his company, Arnold Beckman kept close ties to his home state, and served in some capacities at the university in Urbana, on boards and cabinets. He and his wife, Mabel, made a donation in 1980 to the university, which established the Beckman Fellowships for junior faculty.

So when some administrators of the University of Illinois in 1983 sat down to brainstorm about possible new projects that would be of such imaginative scope that they could attract the attention of private donors, and put the flagship university on the cutting edge of the research world, it was natural to approach Arnold and Mabel Beckman. But first they had to formulate an ambitious project for consideration. The resulting 1985 proposal was for a “multidisciplinary” initiative, one which would span physical sciences and engineering, as well as life sciences and behavioral sciences. Part of the proposal submitted to the Beckmans highlighted the already-strong interdisciplinary research going on at the University, and the new supercomputer center under development.

The size of the gift the Beckmans finally proffered surprised even the people working hardest to attract donations for the new institute. At $40 million, this was the largest single donation to the University of Illinois up to that point in October of 1985, and was additionally the largest gift made to any public university until that time. Ground was broken in October 1986, and that winter Klaus Schulten viewed the big black hole that would become his future home, the Arnold and Mabel Beckman Institute for Advanced Science and Technology.

“The Beckman Institute was for me,” says Schulten, “a protectorate for interdisciplinary work, and a very beautiful one at that.” He is clear to point out, in retrospect, that he needed the umbrella of this institute to accomplish the goals he had set for himself as a physicist doing theoretical biology. First of all, he wanted to combine science and technology, in this case using the technology of the computer to aid and complement his studies in theoretical biology. To get science and computer technology together, he needed to go to the Beckman Institute, a place where he no longer heard cries of “it will be your intellectual downfall if you go to the engineers.”

The Beckman Way, according to Klaus Schulten, a resident of the Beckman Institute from its inception.

Schulten wants not only to use technology to facilitate his work but to study it as well, research that is also consonant with the Beckman Institute. In fact, this article covers many promising new biology-related technologies at the nano-scale. These biotechnologies have been demystified by the computational microscope, a tool combining biology, physics, chemistry, and hardware and software, and honed at the Beckman Institute since 1989. A student of history, Schulten is aware of the close relationship between science and technology on the timeline of modern achievements. Not only has much good science come about through close contact with technology, but science can also contribute to emerging technologies. While Schulten appreciates the historical connection between science and technology, he also points to his roots in rural Westphalia, where he grew up surrounded by farmers. “I am much more a person who loves philosophy and mathematics,” explains Schulten, “but who just comes from a background that is much more grounded in reality, in my case literally in agricultural reality, in dirt.” He sees similarities between himself and his boyhood village farmers, who talked about lofty ideas but also had their feet on the ground. Schulten feels that having his feet grounded in the “dirty business of computing” is the way to get things done.

The atmosphere at the Beckman Institute is best encapsulated by Schulten's quote about it, that “character is important, but not what kind of union card you carry.” He joined the institute for two reasons: to be able to conduct interdisciplinary work that truly treats all partners with respect, and to combine science and technology. He calls this “The Beckman Way,” in that science drives technology, and technology drives science. Schulten likes to point out that one instance of the first category–science driving technology–is how technology can pose new questions to scientists. For example, when the atomic force microscope was first introduced, it worked by a tiny tip tapping the surface of a sample to register a two-dimensional image, and scientists discovered that contact with the tip could damage so-called soft biological samples. The question scientists then asked was, how could they re-invent this technology so it would not damage their soft samples? And non-contact mode was born.

Schulten is also clear to point out that the Beckman philosophy he coined is a two-way street, in that technology can drive science–with the obvious case that technology can give researchers the means to do their science. One example of this is the electron microscope, which made a whole subset of biological findings possible in the twentieth century. Closer to home, in Schulten's world, the advances over two and a half decades in parallel computing have allowed Schulten to focus on the question that has motivated him for forty years: How do I describe biological organization? He knows this requires studying processes and societies inside a cell, which for Schulten means considering millions of molecules at once. And the technology of the parallel computer with its accompanying algorithms (many designed by his own group) has enabled Schulten to get closer and closer to his goal of explaining biological organization. Additionally, Schulten is using the technology behind the computational microscope to illuminate the bionanoengineering systems discussed below. His years of tweaking the computational microscope paid significant dividends indeed when it came to bionano-systems.

Technology, in fact, was one of the reasons Schulten got funding in 1990 from the National Institutes of Health, for his NIH Center for Macromolecular Modeling and Bioinformatics, which coincided more or less with his move to the Beckman Institute. These centers are funded by the NIH to create unique, cutting-edge technologies and software to advance the biomedical field. Schulten cites two reasons for his successful grant application. First and foremost was the parallel supercomputer he risked everything to assemble. “I had built this computer and thereby demonstrated that parallel computing can be useful in biology when you model macromolecules,” Schulten offers. Until that time, people were using only single processor computers, and parallel computing was in its infancy. The NIH, according to Schulten, had several centers already working on the premise of going parallel, but Schulten was farthest ahead with his newly constructed and whirring parallel machine. NIH was interested in giving this technology more opportunities to blossom. In fact, without containing his laughter, Schulten likes to point to the site visit for the 1990 grant, where he prominently displayed the homemade computer to show the visitors, and hoped they would be impressed by its blinking light, a sign that it was working hard at its task. He reiterated to the site visitors that he couldn't turn it off, that it had to run for two years continuously. With the abundance of impressive graphics displays available in biomolecular modeling these days, a blinking machine would not be considered of much merit during a site visit. Schulten also thinks his work at the time on the brain was an attractive topic for the NIH. “I think they liked that too,” emphasizes Schulten, “that we were not just a one-trick pony show.” Schulten's center was so successful that he would receive funding every five years for the next twenty-five years.

The Computational Microscope: A Tool for Discovery

“One time I published a paper, and I got a referee report back, from a very famous biologist at Brandeis University, and he wrote: This is the best psychoanalysis of a molecule that I ever read!” Schulten likes to joke about this incident that happened to him, but it really speaks to the power of the computational microscope. He uses the computer as a tool, a microscope even, to intensely inspect a biomolecule to learn its properties. While the computational microscope may provide a gateway to the many facets of proteins and viruses and ribosomes, which are all active research topics in Schulten's group, incidentally, it is not just a technology for visualizing; it is also a tool for discovery.

It has literally been forty years in the making, the computational microscope. The process that led to photography was also created by a physicist, Louis Daguerre, who labored for many decades before he developed the first daguerreotype in 1839. But unlike the still pictures captured in photography, the computational microscope yields results like moving pictures. It takes snapshots of how the system in question behaves over time, and the subsequent “trajectory” is basically a moving animation of the biomolecules. But what goes into generating such a trajectory? The first stage is information from chemistry, physics, and mathematics. Consider a protein with many atoms. Chemistry provides the forces the atoms exert on each other, for example, from chemical bonds. And then Newtonian physics yields the specifics about how the forces from above make all the atoms in the protein move. Next, mathematics reveals how to compute the interactions and the motions. Couple that with continual refinement of algorithms, and the perpetual increase in computer power over the years, and the computational microscope was honed to the point that it is now in the business of discovery.
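The recipe in the paragraph above (forces from chemistry, motion from Newtonian physics, a numerical scheme from mathematics) can be illustrated with a toy sketch. What follows is not NAMD and not the group's method; it is a minimal illustration that assumes a single harmonic "bond" between two atoms on a line, made-up unitless parameters (`k`, `r0`, `dt`), and the standard velocity Verlet integration scheme. All function names are hypothetical.

```python
# Toy molecular dynamics in one dimension: two atoms joined by one bond.
# All parameters are illustrative assumptions, not values from any real force field.

def bond_force(x0, x1, k=1.0, r0=1.0):
    """'Chemistry': force on atom 1 from a harmonic bond (atom 0 feels the opposite)."""
    return -k * ((x1 - x0) - r0)

def total_energy(x, v, m, k=1.0, r0=1.0):
    """Kinetic plus bond energy; useful for checking the integrator."""
    kinetic = sum(0.5 * mi * vi ** 2 for mi, vi in zip(m, v))
    potential = 0.5 * k * ((x[1] - x[0]) - r0) ** 2
    return kinetic + potential

def run_trajectory(x, v, m, dt=0.01, n_steps=1000):
    """'Physics' and 'mathematics': integrate Newton's equations with velocity Verlet."""
    x, v = list(x), list(v)
    f1 = bond_force(x[0], x[1])
    forces = [-f1, f1]
    frames = [tuple(x)]                      # each frame is one snapshot
    for _ in range(n_steps):
        for i in range(2):
            v[i] += 0.5 * dt * forces[i] / m[i]   # half-step velocity update
            x[i] += dt * v[i]                     # full-step position update
        f1 = bond_force(x[0], x[1])               # forces are recomputed every step
        forces = [-f1, f1]
        for i in range(2):
            v[i] += 0.5 * dt * forces[i] / m[i]   # second half-step velocity update
        frames.append(tuple(x))
    return frames, v

masses = [1.0, 1.0]
start_x = [0.0, 1.2]          # bond starts stretched beyond its rest length
start_v = [0.0, 0.0]
frames, final_v = run_trajectory(start_x, start_v, masses)
```

The list `frames` plays the role of the "trajectory": a sequence of snapshots that could be played back as an animation. A production code differs in every practical respect (three dimensions, many force terms, millions of atoms, parallel execution), but the loop of computing forces and then advancing positions is the same basic idea.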

In Schulten's group, there are two software products, both over twenty years old, that specifically undergird the computational microscope. One is NAMD, a computer program that is tailored to work optimally on parallel machines and is mostly geared towards molecular dynamics calculations. The homemade supercomputer mentioned above was the impetus that propelled Schulten into the world of parallel computing and the creation of NAMD, a history that is told in more detail elsewhere. While a light microscope is made of brass tubes and glass lenses, in the computational microscope the brass tubes might be considered NAMD and the glass lenses would be VMD, the other major software product produced in Schulten's group. VMD, or Visual Molecular Dynamics, is visualization and analysis software and is often used in tandem with NAMD. This graphics software has many tools available to help evaluate molecular dynamics trajectories, and grew out of Schulten's need to visualize and “psychoanalyze” the data he was generating. A comprehensive history of VMD, which highlights many of its unique features, is also available.

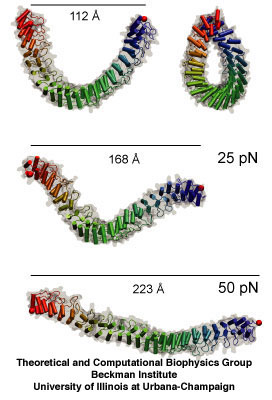

Ankyrin, whose mechanical properties were first unveiled in 2005 by Klaus Schulten's group.

To reach the point of categorizing the computational microscope as a tool for discovery, the many factors that define it had to be continually improved, such as simulation time and the size of the system in question. While molecular dynamics can be traced to the 1950s, when scientists first used the methodology to simulate liquids, a big step came in 1977 with the publication of a paper by Martin Karplus, applying molecular dynamics for the first time to a protein. This had a big effect on Schulten. In graduate school at Harvard, Schulten sat down the hall from the two students who also worked with Schulten's advisor, Karplus, on this initial foray into molecular dynamics. “I realized that this computational approach,” Schulten says of the 1977 molecular dynamics paper, “opened new doors to describe problems that you couldn't do with a purely theoretical approach that I had taken until that time.” As mentioned previously, Schulten really paid the price for turning to computing in the 1980s, as he often was made to feel like an idiot by his colleagues. But his relentless efforts to get computer engineers involved in his research, especially from the point at which he joined the Beckman Institute, were among the reasons the computational microscope grew to the point where he believes it is now a tool for discovery.

Not everyone is convinced, however. Skeptics abound. The 2013 Nobel Prize in chemistry went to Michael Levitt, Arieh Warshel, and Schulten's advisor, Martin Karplus, for instigating the use of computer methods to elucidate large complex chemical structures, which is a big validation for molecular dynamics. Even so, Schulten is sure there will still be skeptics; but because of the Swedish award, he thinks convincing them may not be as difficult as before. He once submitted a paper to the journal Structure, about the discoveries made via the computational microscope, only to have it rejected. On most of his other papers to this journal he teamed up with experimentalists, but not in this specific manuscript. Schulten objected, and it was clear that there was some disagreement on the editorial board about Schulten's paper, and that some of the board were in support of Schulten. They then reached a compromise, and Schulten was told he could write an “essay” for the journal about this topic. The resulting piece, “Discovery Through the Computational Microscope,” was published in 2009, and is quite fascinating reading.

The main point of the 2009 essay is that molecular dynamics is now sophisticated enough to make discoveries, which are then later verified by experiment; it is not necessarily the other way around anymore. Schulten cites as one of his biggest successes his work on ankyrin repeats. These protein pieces are related to how cells sense mechanical cues. Some mechanosensors in living cells have ankyrin repeats attached to them at one end. When the structure of these ankyrin proteins was solved, it was clear that these molecules had to be mechanically sensitive, and the next obvious question was to determine just how mechanically sensitive they were. This was around 2004. Schulten and graduate student Marcos Sotomayor immediately took up the issue and ran simulations to determine the spring-like behavior of the repeats. They found that the protein was a much more sensitive spring than anything seen before. If this spring were hanging from the ceiling, and a feather was attached to it, the feather would pull the spring right to the floor. And they discovered this first, beating out two experimental teams the theorists knew were also studying the mechanical properties of ankyrin. While Schulten and Sotomayor were able to publish their spring constant in Structure, ironically the experimental team that confirmed this very sensitive behavior of the repeat got a paper in Nature in 2006, although they had to cite the simulation results from the team in Illinois. This is just one example of many illustrating that discoveries can be made with the computational microscope.

“There are many skeptics, and then there are also many people who are positive,” notes Schulten about molecular dynamics. “And very often, they are the same people.” But how could this be? In a molecular dynamics calculation, forces are always being calculated; the particles of the biomolecule are constantly moving closer together or farther apart and forces are calculated over and over. The hitch is that the forces are described in a very primitive way in order to calculate fast enough. In other words, force quality is a slave to speed.
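To make the "forces are calculated over and over" loop concrete, here is a minimal sketch, not the method used in NAMD or by Schulten's group. It assumes just two particles on a line, a Lennard-Jones pair force as the "primitive" force description, reduced units, and a velocity-Verlet integrator; the function names and parameter values are illustrative choices.

```python
def lj_energy(r, epsilon=1.0, sigma=1.0):
    """Lennard-Jones pair energy at separation r (reduced units)."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def lj_force(r, epsilon=1.0, sigma=1.0):
    """Lennard-Jones force along the separation (positive means repulsive)."""
    sr6 = (sigma / r) ** 6
    return 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r


def simulate_pair(r0=1.5, steps=2000, dt=0.001, mass=1.0):
    """Integrate two particles on a line with velocity Verlet.

    Returns the separation at every step plus the total energy
    before and after the run, so energy conservation can be checked.
    """
    x = [0.0, r0]   # positions; both particles start at rest
    v = [0.0, 0.0]  # velocities
    f = lj_force(x[1] - x[0])
    forces = [-f, f]  # equal and opposite forces on the pair
    e_start = lj_energy(r0)
    separations = []
    for _ in range(steps):
        # Half-step velocity update, then full-step position update.
        for i in range(2):
            v[i] += 0.5 * dt * forces[i] / mass
            x[i] += dt * v[i]
        # The force is recomputed at every step: the cost that
        # dominates a real molecular dynamics run.
        f = lj_force(x[1] - x[0])
        forces = [-f, f]
        # Second half-step velocity update with the new forces.
        for i in range(2):
            v[i] += 0.5 * dt * forces[i] / mass
        separations.append(x[1] - x[0])
    kinetic = sum(0.5 * mass * vi * vi for vi in v)
    e_end = kinetic + lj_energy(x[1] - x[0])
    return separations, e_start, e_end


if __name__ == "__main__":
    seps, e0, e1 = simulate_pair()
    print(f"energy drift: {abs(e1 - e0):.2e}")
    print(f"separation range: {min(seps):.3f} to {max(seps):.3f}")
```

A production force field sums many such simple terms over every pair of nearby atoms, which is why a cheap functional form matters so much for speed.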

“If people never did molecular dynamics,” reflects Schulten with all sincerity, “and you would tell them today: This is what we want to do. You wouldn't find a single person in the whole world who would say: This is a good idea. Do it!” That being said, when you are dealing with thousands of atoms, and sometimes millions of atoms, the minuscule detail is not that important, and it is still possible to get critical features right. Schulten also likens it to the attitudes in physics, in which discipline Schulten is trained. “The physicists have been extremely successful with theory, even though they are more qualitative,” he clarifies. He points to two cases, the conductivity of copper, and superconductivity, and the subsequent theories that describe them. They work remarkably well, and accuracy is not such a critical point–same thing with molecular dynamics. “So that is why there's a critique, because it's not a very precise science in a way,” Schulten says about the tension over molecular dynamics.

But the other side to this argument is that there are many things described well by molecular dynamics. Often there is no other way to look at the physical mechanism and all its details. The trajectory, or movie of, for example, a protein, is very often the only way to see how the protein works. And since light microscopes can't see individual proteins, and electron microscopes often freeze their samples, molecular dynamics is the only way to see what is going on at times. “And now the situation today is that people have changed dramatically, to actually being glad that they see the movie,” concludes Schulten about former skeptics. He's even seen instances where manuscripts by experimental groups have been rejected because they didn't include a molecular dynamics simulation. As we will see in the following pages, molecular dynamics sheds light on the physical mechanisms underlying the nano-sensors, and often provides very detailed movies of the inner workings of novel devices in the growing field of bionanoengineering.

Gold Binding Peptides

Klaus Schulten's curiosity was piqued when he finally met Mehmet Sarikaya, most likely during 2001. A professor in the Materials Science and Engineering department at the University of Washington, Sarikaya had a reputation as quite a character, according to Schulten. Sarikaya was very interested in biomimetics, the science of using the principles behind nature's biological systems to solve problems in engineering. What Schulten heard that day was that Sarikaya had isolated a protein segment, or peptide, from a large array of mutated peptides, that actually bound to gold crystals. But his peptide only bound to a certain crystal face of the gold, and Sarikaya didn't know why. In the course of their discussion, Sarikaya phrased the problem thus: We want to purify gold particles in the Sacramento River, but first we need to detect them, and this gold binding peptide I've discovered offers just that tool, but I don't know why it works.

A peptide sequence specifically crafted to bind to gold, which Rosemary Braun and Klaus Schulten illuminated with molecular dynamics.

Schulten realized it would be possible, in theory, to run a molecular dynamics simulation with peptide plus gold, but knew it had never been done before. This would mark his first foray into the world of bionanoengineering, for it combined biology (protein in solution) with a nanosystem (gold particles). And this would begin a long string of collaborations with engineers who designed innovative bio-sensors but had no microscopy available to view the particulars going on at the nanoscale. Schulten realized that molecular dynamics, with the new twist of adding gold nanosurfaces, could help scientists visualize why peptides and gold in solution were forming large flat crystals (that were always the {111} crystal plane). And he had the perfect woman in mind for the job.

Rosemary Braun never anticipated that she would become so fascinated by biology. A physics graduate student at Urbana-Champaign, she only knew she wanted to gravitate to theoretical and computational studies. But Klaus Schulten's class on Non-Equilibrium Statistical Mechanics really resonated for her. “It was FAR and AWAY my favorite class in my entire graduate school experience at Urbana,” Braun remarks. “It was just a phenomenal class.” While the subject matter was in itself interesting, Schulten used examples from biological physics to illustrate the various processes he taught about. Braun was then drawn to biology, and so it seemed obvious to choose Schulten as her advisor when that time came.

Schulten presented to her the problem of the gold-binding peptide, which was the second project she worked on after joining the group. She knew she had to run a molecular dynamics simulation of the entire system, using different crystal faces of gold to find out why the peptide preferred only one specific crystal plane, but there were challenges. The peptide sequence that Sarikaya had isolated was just a mutation, not a well-known peptide whose structure had been determined and studied. To run a molecular dynamics simulation, a structure was necessary; all Schulten and Braun had was the protein's sequence (that is, the order of the amino acids that form the protein segment). Since it wasn't a modification of a naturally occurring protein either, there was no structure like it to even use as a starting point, as a homolog. So Braun and Schulten had to use structure-prediction tools. It should be noted that predicting structure merely from a protein's sequence was then, and still is now, a very difficult process.

Klaus Schulten runs his group in Beckman in such a way that mentoring is an important aspect. Junior people know that they can look to the more senior members for practical, day-to-day advice. Schulten will often ask senior graduate students or postdocs to help undergraduates there for a summer. And the group is full of computer engineers who are actually designing the software (like NAMD and VMD) that most of the members use to complete their calculations, and the developers are a great resource for software questions. Braun was grateful for the extensive guidance she received from Dorina Kosztin, a PhD student and later a postdoc at Beckman. “What he does very well,” says Braun of Schulten, “is facilitate a system where the people who have learned it can teach it in the nitty-gritty way to those who are just starting to learn it. We functioned as a family of sorts.”

In their simulations, Braun and Schulten found out that there was increased affinity for just one crystal plane, and not for other crystal planes. And the computational microscope revealed why: it had to do with the shape of the gold surface. When the peptide was placed flush on one of the other, non-preferred crystal faces, water started seeping in and impacting the interaction between the peptide and the gold surface. But they did not see water get between the peptide and the gold surface when they studied the preferred crystal face, the {111}. Hence, Braun and Schulten could present a detailed description of what Sarikaya was observing, and explain the underlying mechanism with the computational microscope. Little did Schulten realize that this project would become one of many in his new life as an investigator of bionanoengineering phenomena.

Nanopores for Sequencing DNA

“He was like a spiritual bulldog almost,” Klaus Schulten says of the man, Greg Timp, who really pushed Schulten full bore into the field of bionanotechnology. “Every week he wrote an inspirational email, usually with some story from literature, history, the Bible, and then he related it to the work going on here, in nanodevices.” While Schulten was inspired by his colleague Timp at Urbana to contribute to the new field of bionano-devices, there were a few other factors at work that convinced Schulten it would be a good idea to take his theoretical and computational methods into a realm they were not necessarily tailored for. That realm would be the combination of the “wet” biological materials with the “dry” nanotechnology. While Schulten had spent his whole professional life on the former, he was just about to delve into the latter in his upcoming research.

Alek Aksimentiev, a postdoc in Klaus Schulten's group from 2001 to 2005, who worked on DNA in nanopores.

Part of Schulten's taking the plunge into the nano plus bio admixture stems from his realization of an historical moment in science. While many consider the twentieth century to be the era of physics, now many prominent scientists are calling the twenty-first century the era of biology. “And this era,” Schulten explains, “like any major scientific era, is very often determined by not only pure science developments but also by pure technology developments.” For example, in the twentieth century, one only has to look at the transistor, which flowed from solid-state physics, and led to the computer revolution and all its accompanying technologies. Schulten sees the relatively new field of bioengineering as part of this Life Sciences Century, and one instance where pure science and technology go hand in hand. He saw for himself why technology needed to be combined with the life sciences. “The reason why it's really necessary,” Schulten says of bioengineering, “is that one needs to measure very small samples. And these electrical devices could be scaled smaller, smaller, smaller, to exactly the scale you need in biology.” As living systems are made of molecules, often in low concentrations as there are very few of the same ones in a whole cell, measurement needs to be extremely sensitive, routinely down to the single-molecule level. For this, new technologies need to be employed. And one of the ways to be extremely sensitive and act with very small probes is to utilize nanotechnologies. So not only did Schulten recognize the historical significance of the blossoming field of bionanoengineering, but he knew that the systems (biomolecule plus nanodevice) were so small he could in fact very well simulate the entire thing. He has always lived in his own “nano-village” as he once called it, simulating biological systems considered nano-sized, and these bionano-devices fit nicely into his village rubric.

Another factor at work in bringing Schulten into the field of bionanoengineering is that the University of Illinois at Urbana-Champaign houses the Micro and Nanotechnology Laboratory, and so is a strong presence in the field of bionanotechnology. While the Micro and Nanotechnology Laboratory does photonics and microelectronics, it also focuses on nanotechnology and biotechnology. Schulten was essentially steps away from this hub of cutting-edge research, and thus near experimentalists who were eager to collaborate with him. They could use his expertise to visualize their novel systems, and his work with these researchers from this laboratory will be covered below in detail.

Hence, for Schulten, the time was right, historically speaking, and his presence at Illinois also brought him into contact with experimentalists whose novel devices he could simulate. The first instance of Champaign-Urbana offering Schulten an experimentalist to work with was his collaboration with electrical engineer Greg Timp, the spiritual bulldog. When he started up this collaboration, in 2003, Schulten also had an excellent postdoc who was critical to the success of the mission to combine “wet” biological molecules with “dry” nanodevices in simulation. That postdoc was Aleksei Aksimentiev, and the system he and Schulten worked on from Greg Timp's lab was DNA in a nanopore.

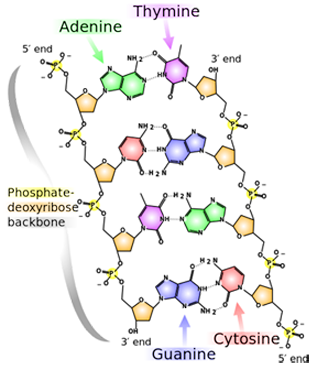

DNA is a fundamental molecule of life and it contains all the information to make a complete living entity, such as a human, plant, or bacterium. Thus DNA is considered hereditary material and is basically the storage information system for living organisms, much as a hard disk or a tape drive stores all the information for a computer. There are basically four letters of the DNA alphabet, and the sequence of those letters codes for genes. So the point of DNA sequencing is to unravel the order of the letters that code for each gene of a living entity. (The sum total of all the genes of a living entity is called its genome.) The ability to determine the sequence of a living system's genes has had huge implications in the fields of biology and medicine. Some of the areas most revolutionized by DNA sequencing are plant sciences, forensic biology, pharmacology, and genetic disease studies.

But DNA sequencing is not cheap, straightforward, or quick. However, great technological strides have been made, and the cost of sequencing an entire genome has gone from $95 million in 2001 to roughly $10,000 in 2011. Many people are working on alternative methods to determine the genetic code. One such method is to use a nanopore.

A nanopore is a tiny pore in a membrane; the diameter of the pore can go from half a nanometer to a few nanometers, and the length of the pore might vary from two to thirty nanometers. A nanopore can be made by nature, such as the channel created across a membrane in a cell, or manufactured in a laboratory, such as the pores Greg Timp made. In Timp's case, he drilled holes in a silicon nitride membrane with an electron beam, and perfected methods to make solid-state pores of various sizes.

The way nanopore sequencing works is the following: Immerse the nanopore in an electrolyte (which is just salty water, or water filled with ions) and apply a voltage difference. To get some idea of scale, if the nanopore were your hand and you were sitting on the 30th floor of a skyscraper, the electrodes would each be the size of a house, and a negative electrode would be on the 50th floor and a positive electrode on the 10th floor. The ions in the solution would begin to flow through the pore and create a steady state current that could be measured. Now if a strand of DNA were introduced into the system, an interesting thing would happen. The DNA strand would find its way to the pore and then get sucked in and go through it. It is not necessary to actually bring the DNA to the pore. The DNA would partially block the current as it was going through the pore, and the crux is that the blockade current would be sensitive to each of the letters in the strand. Hence, by looking at the current profile, it would be possible to determine the sequence of the DNA strand. “So in this sense, this nanopore sequencing is analogous to a tape recorder,” says Aksimentiev. “You have a sequence written in the tape and you push it through the reader.”
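The tape-recorder idea can be sketched in a few lines of code. This is a deliberately idealized toy: it assumes each base produces its own distinct blockade current and that one clean reading is taken per base. The current levels in `LEVELS` are invented for illustration and are not measured values.

```python
# Hypothetical blockade currents per base (arbitrary units).
# These numbers are made up for the sketch; real signals are
# noisy and the differences between bases are far subtler.
LEVELS = {"A": 0.20, "C": 0.35, "G": 0.50, "T": 0.65}


def call_base(current):
    """Return the base whose reference level is closest to the reading."""
    return min(LEVELS, key=lambda base: abs(LEVELS[base] - current))


def read_strand(trace):
    """Turn a list of current readings, one per base, into a sequence."""
    return "".join(call_base(current) for current in trace)


if __name__ == "__main__":
    trace = [0.21, 0.63, 0.49, 0.36]
    print(read_strand(trace))
```

The hard part in practice is exactly what the toy assumes away: obtaining a reading clean enough that the levels for different bases can be told apart.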

A snippet of DNA, whose four bases are A, T, G, and C. Note the slight structural difference between the 3' end and the 5' end.

One of the most popular methods of DNA sequencing is called Sanger Sequencing. It takes help from biological enzymes that synthesize a complementary strand to the one in question. “We are relying on biology to read the DNA sequence for us,” Aksimentiev notes. But nanopore technology would no longer need enzymes to manipulate the chemistry of the molecule. However, there are major hurdles with nanopore sequencing. The DNA code is written in atoms, as it is almost impossible to come up with anything smaller than atoms for storing a blueprint of a life form. Shown is an image of a bit of DNA with its four bases (adenine, thymine, guanine, cytosine, or A, T, G, C). The atoms that make up the bases are just carbons, nitrogens, oxygens, and hydrogens. Nanopore sequencing would have to distinguish between nucleotides, or bases, that just differ by a few atoms. “And that, if you think about it, is a really tall order,” says Aksimentiev, “because you are looking at really small differences, atomic scale differences, between very small objects, individual objects. Because we want to read out that sequence of DNA with single nucleotide precision, so for each nucleotide we want to have resolution.”

In 2003, Greg Timp was pursuing the idea of using solid-state nanopores to sequence DNA and wanted Schulten and Aksimentiev to visualize what he was measuring. Since the pore was just a few nanometers wide, and the system was immersed in liquid, neither an optical microscope nor an electron microscope could do the job of proper visualization of the DNA in motion through the pore. Schulten realized molecular dynamics could probably be used to describe the three-part system of electrolyte, DNA, and nanopore. To Schulten's knowledge, at that time in 2003 no other group had done similar simulations on these kinds of bioengineering platforms.

Today it perhaps seems sensible to get molecular dynamics into the bionano-game, but Schulten took a chance back then. “We could have fallen flat on our faces,” Schulten reiterates. “We had to learn a lot to do things right, gain more expertise, be patient and wait actually for several years until it caught on and people said: Oh, this is interesting.” Schulten explains that when characterizing nature computationally, artificial descriptions are often used, so when a totally new substance is added, things can easily go wrong. With a system of three components, if description of one component is significantly better than the other two, things get out of balance. Thus he and Aksimentiev had to be very careful with their novel system so that each component was described equally well.

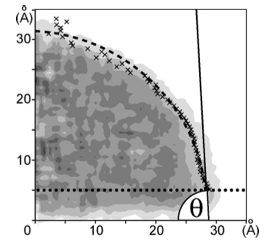

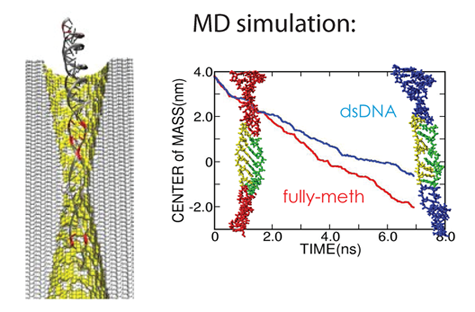

And they had some great successes in elucidating the translocation of DNA through the nanopores. One such success came from asking the simple question: What is the smallest pore DNA can snake through? It seems unlikely that a double-stranded DNA piece could fit through a pore whose diameter was smaller than the width of the double helix, but molecular dynamics predicted that it could. The reason is that the DNA gets stretched by the electric field in the very center of the pore. The behavior of the potential inside a pore can be imaged only with the computational microscope; the potential stays the same from one electrode to almost the middle of the pore, then in the very middle it falls precipitously, and from there to the other electrode it resumes an almost constant value. This behavior right at the center of the pore stretches DNA to almost twice its equilibrium length, decreasing its diameter so that translocation is possible. Molecular dynamics predicted critical pore radii and associated electric fields, which were all verified by experiment. This work that Schulten and Aksimentiev conducted with silicon nitride nanopores was not the only nanopore sequencing work they found themselves immersed in.

Imaging Alpha-Hemolysin: Precursor to Computational Microscope

At the same time Schulten and Aksimentiev were working with solid-state nanopores, they were conducting additional work on another membrane channel–this pore engineered by nature–which led to a very highly-cited paper, a publication Schulten claims is one of the best to ever come out of his group. And this 2005 article really compelled Schulten to later coin the simulations done by his group “the computational microscope.” But it all started with the word “imaging.”

The idea to use a nanopore to sequence DNA or RNA goes back to at least 1996, when a group of scientists (spearheaded by David Deamer and Daniel Branton) published a paper suggesting this novel use of a membrane channel, although the idea may even have percolated for years before the publication. The membrane channel they used was one found in nature, called alpha-hemolysin. Secreted by the bacterium Staphylococcus aureus are building blocks of alpha-hemolysin, which then form a channel in the membrane of a target cell. What results is the uncontrolled permeation of water, ions, and small organic molecules. The targeted cell also can lose its important molecules, as well as its steady potential gradient, and hence this eventually leads to cell death. But alpha-hemolysin, at the turn of the millennium, was recognized as a beautifully engineered transmembrane channel built by nature, so scientists started studying its properties, and then a subset of researchers actually started studying this channel as a potential candidate for DNA sequencing.

Alek Aksimentiev and Klaus Schulten designed a protocol to view the electric field inside a pore, an image only possible with the computational microscope.

Schulten and Aksimentiev wanted to characterize the properties of alpha-hemolysin embedded in a lipid membrane using molecular dynamics at this time, since its structure had been published and it was a hot topic in biology. But they conceived a clever twist: run a lengthy simulation and look at the average properties of the channel. “That was also much more reminiscent of what people know about the system,” says Schulten about their averaging, “than the constantly fluctuating alpha-hemolysin that you see in a molecular dynamics simulation. It's a very nervous kind of protein, with all kinds of tempers and conformational changes and so on. But on the average it behaves pretty reasonably.”

One of the most important properties they averaged was the electrostatic potential inside the channel. In every living cell there is a negative potential inside, and this potential is critical, as it drives many cellular processes. In the alpha-hemolysin channel all sorts of things flow across it, such as water, ions, DNA, and proteins. When experimentalists were studying alpha-hemolysin they would determine electric currents in the channel by applying external electric fields across the membrane. Schulten and Aksimentiev could reproduce these kinds of experimental studies (called patch clamp measurements) in their simulations. But then they presented a picture of the electrostatic field in these instances. To do this, they ran a long molecular dynamics simulation, and then averaged over the thermal motion that the channel undergoes, with all of its charges that contribute to the field. And in this way they envisioned the electric field inside the channel.

The innovation of this averaging stems from the fact that a biological cell is, electrostatically speaking, extremely noisy. It has ions that diffuse all the time; it has lipids with fluctuating head groups; it has dipole moments in its proteins. Schulten likens a snapshot of this potential to taking a picture of New York City in a rainstorm–you only see the rain, not so much the buildings. But if you take snapshots for two hours and then average, at the end you see the buildings and not so much the rain. Aksimentiev used the tools available in Schulten's group and some ingenuity of his own to introduce this averaging technique to the world. And it showed a beautiful result of the electrostatic potential–on both sides of the membrane, of the surface of the membrane, and also within the channel of the alpha-hemolysin. The result was a 2005 paper, “Imaging alpha-Hemolysin with Molecular Dynamics.” This paper is extremely highly cited, with 252 citations already and counting. The method Aksimentiev and Schulten brought to the scientific community would not have been possible earlier, as advances in NAMD and computer power aided the group in Beckman in 2005. Schulten says it really highlighted the resolving power of the computational microscope. And to his knowledge, this was the first time he used the word “imaging” in relation to molecular dynamics.
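The rainstorm analogy can be illustrated with a small numerical sketch. It is not the protocol Aksimentiev and Schulten used: the step-shaped "true" potential, the Gaussian noise model, and all the numbers below are assumptions chosen only to show how averaging many noisy snapshots brings out the underlying picture.

```python
import random


def static_profile(n_points=50):
    """Hypothetical fixed potential along the pore axis (the 'buildings')."""
    return [1.0 if i < n_points // 2 else 0.0 for i in range(n_points)]


def noisy_snapshot(profile, noise, rng):
    """One instantaneous frame: the fixed profile plus thermal noise (the 'rain')."""
    return [p + rng.gauss(0.0, noise) for p in profile]


def time_average(snapshots):
    """Average the snapshots point by point."""
    n = len(snapshots)
    return [sum(column) / n for column in zip(*snapshots)]


def rms_error(estimate, truth):
    """Root-mean-square deviation between an estimate and the true profile."""
    total = sum((e - t) ** 2 for e, t in zip(estimate, truth))
    return (total / len(truth)) ** 0.5


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    truth = static_profile()
    frames = [noisy_snapshot(truth, noise=5.0, rng=rng) for _ in range(400)]
    print("single frame error:", round(rms_error(frames[0], truth), 2))
    print("averaged error:    ", round(rms_error(time_average(frames), truth), 2))
```

For independent noise the error of the average shrinks roughly as one over the square root of the number of frames, which is one reason long simulations were needed for a clean image.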

Here's a Test for You, Schulten

Klaus Schulten was certainly surprised to hear that if he wanted to work with experimentalist Amit Meller on alpha-hemolysin, first he had to pass a test. But as Meller explained the project in detail, Schulten grew more and more excited, for Schulten realized this “test” would be a great assessment of his life's work in molecular dynamics.

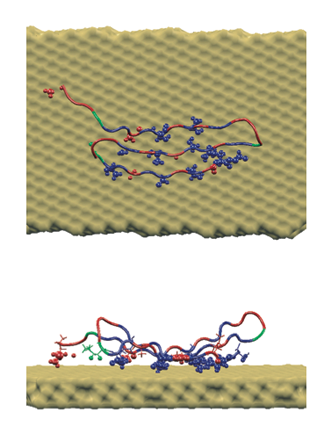

It was May of 2004 and Schulten was at a conference on nonlinear studies in Santa Fe, New Mexico. Amit Meller, then at the Rowland Institute in Massachusetts, was also at the conference and Schulten heard Meller's talk about DNA translocation experiments through alpha-hemolysin. Late one evening at the conference there was an after-talk and beer session, and Meller and Schulten got to chatting. Schulten revealed that he and Aleksei Aksimentiev had been working on simulations of alpha-hemolysin, and that Schulten would love to collaborate with Meller. Meller said he had been working on an experiment, which had a very clean result, but it presented him with a puzzle. He had been unable to explain this microscopically and had been wondering about the explanation of the conundrum quite a bit lately. The dilemma he faced was based on the fact that DNA is not the same from front to back. One end of it is called the 3' end, and the other is the 5' end (as is shown in the image above of the atomic structure of DNA). Meller and team were watching single-stranded DNA enter the nanopore, and in response they measured the residual current that resulted, which was about 10 to 20 percent of the steady state current. “We figured out a way to perform an experiment where we forced our molecules to enter the pore either on the 3' end or the 5' end,” Meller told Schulten that night. “And when we measured the residual current, we saw that there is a difference, and a substantial difference that we could characterize. It was that one of the ends was blocking the current more than the other.”

Moreover, he told Schulten, when they simply let the DNA molecule find its way to the pore and then escape, they found a substantial difference in the escape time for the 3' versus the 5' end. Meller says the reason that this was both surprising and puzzling was that the strands of DNA in his translocation experiments were just homopolymers, that is, 70 nucleotides of adenine (base A) right next to each other. When you flip it over the strands look exactly the same. “So it was quite mind-boggling to realize that nature knows to distinguish between those subtle differences between the entering 3' and 5' ends,” recollects Meller.

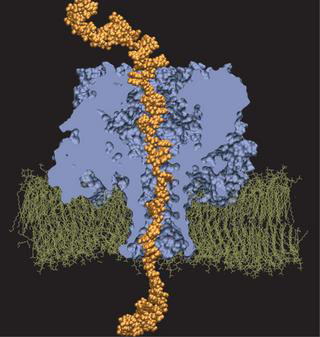

DNA permeation in alpha-hemolysin, as simulated by Alek Aksimentiev and Klaus Schulten.

So Meller clearly laid out to Schulten that there was a very clean and crisp experiment, but that the two distinct ends of DNA behaved differently in both the situations he described. However, he never told Schulten which end did what. There was a reason behind Meller's bold challenge: “But then, maybe I had an extra beer that evening,” he recounts. “I was still a junior faculty at that time, and Klaus was much more, I would say, senior than me.” He told Schulten that while he had a lot of respect for people doing computational experiments, Meller wanted to challenge Schulten. Meller wanted Aksimentiev and Schulten just to run the molecular dynamics simulations and get the results in a completely unbiased way. In other words, the theorists would run their calculations in a double-blind fashion. Meller asked Schulten to perform two tests. First, find out which end blocks the current more. And second, find out which end takes almost twice as much time to escape from the pore. He told Schulten that since there is a 50% chance to get the first question right, and a 50% chance to get the second question right, the overall chance to be correct randomly is just 25%. But if they did the computations correctly, then there would be a potentially good publication as a reward.

Meller did not know Schulten well at that time, and was unsure of how Schulten would react to being challenged like this. But Schulten grew very excited, according to Meller, and seemed to take no offense. And, in true Schulten fashion, even though it was late at night in the western United States and even later in the Midwest, Schulten called Aleksei Aksimentiev in Illinois to tell him to start the simulations immediately. Schulten confesses that he and Aksimentiev thought Meller was a tad crazy, and they thought maybe Meller wanted to trick them. But Aksimentiev did the simulations very carefully and got his answers about three months later.

The scientists in Illinois called Meller on the phone and reported their results. They were correct on both counts! The 3' end produced the lower residual current, and the 3' end escaped from the pore more quickly than the 5' end. After further discussion, and further ingenious simulations by the Beckman team, the microscopic picture of what was going on was revealed. The answer can be explained by likening the DNA strand to a Christmas tree going through a door. Clearly, there is only one good way to bring that tree through a door. When the strand of DNA was confined in the alpha-hemolysin, the bases all tilted toward the 5' end. Therefore, the friction experienced by the 3' end versus the 5' end was different, and the 3' end acted like the upper tip of the Christmas tree when translocating through the pore. Meller also offers another analogy: there is only one good way to pet a cat.

Meller was pleased to have a microscopic explanation of his experiments. And for Schulten and Aksimentiev, who in 2004 had only just embarked on simulations of DNA in nanopores, this was a great validation of the computational microscope, for their careful procedures clearly paid dividends. The team quickly wrote a paper, which also included authors Jérôme Mathé and David Nelson, the former the postdoc of Meller's who did the experiments and the latter who did some theoretical modeling. The result was a publication in the Proceedings of the National Academy of Sciences. This tale, of being challenged to do molecular dynamics in a double-blind manner, and not only getting the correct answers but actually finding the microscopic explanation to the phenomenon, left a big impression on Schulten. As he says, “this was one of the most wonderful stories, actually, in all of my molecular dynamics life.”

Carbon Nanotubes: It's all about Polarization

Graduate student Deyu Lu had a challenging task. With a few studies on membrane proteins and light harvesting under his belt already as a graduate student of Schulten's, he needed to find a project suitable for and worthy of a dissertation. Lu was to present his grand idea to Schulten, and then Schulten said he would give it a grade, A, B, C, etc. Two things simultaneously converged at this time, circa 2002, which earned a grade of A+ from Schulten for Lu's idea.

Unbeknownst to Lu, his assignment of a TA office would have major implications for his future. Right next door to Lu's office sat another physics graduate student, Yan Li. As chance would have it, the two had met once before in China, at a meet-and-greet for students who all were headed abroad to study in Urbana. But with their offices right next door, they really started to get to know each other by doing homework together. Li, while also in the physics department, had chosen to work with electrical engineer Umberto Ravaioli, as her first foray in an experimental physics lab had taught her that she was too clumsy for what was required in that setting. So she realized she needed to find a theorist to work with. Since the theoretical physicist she initially wanted to work with was on sabbatical, she eventually found Ravaioli, who was doing theoretical material science, a field she really wanted to pursue.

Now that Li was settled into a theory group, she began to immerse herself in the literature about carbon nanotubes, the topic her advisor thought would make a great dissertation project. To visualize a carbon nanotube, imagine a flat sheet of honeycomb, with a carbon atom placed at each of the apexes of the hexagons that make up the honeycomb. Now roll that 2D sheet into a cylinder, and you have the nanotube, whose diameter is anywhere from 1 to 50 nanometers. Discovered in 1991, these nanotubes have a host of interesting properties and, a decade later, there was a flood of papers exploring their many fascinating aspects. It was just at this time, when there was much excitement about carbon nanotubes, that Yan Li started investigating them. Her advisor suggested she might study the metal-to-semiconductor transition. Roughly speaking, depending on the structure of the nanotube, it can be either metallic or semiconducting. So Li was just at the beginning stages of her dissertation.
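The roll-up picture can be made concrete with a few lines of Python. The sketch below is not taken from Li's work; it assumes the standard textbook description, in which the way the sheet is rolled is labeled by a pair of integers (n, m), and it uses the commonly quoted graphene lattice constant of 0.246 nm. Under the simplest model, a tube is metallic when n − m is divisible by 3 and semiconducting otherwise.

```python
import math

# Graphene lattice constant in nanometers (common textbook value; an assumption here).
A_LATTICE_NM = 0.246

def nanotube_diameter_nm(n: int, m: int) -> float:
    """Diameter of an (n, m) nanotube: the roll-up circumference divided by pi."""
    circumference = A_LATTICE_NM * math.sqrt(n * n + n * m + m * m)
    return circumference / math.pi

def is_metallic(n: int, m: int) -> bool:
    """Simplest zone-folding rule: metallic when (n - m) is a multiple of 3."""
    return (n - m) % 3 == 0

if __name__ == "__main__":
    for n, m in [(10, 10), (9, 0), (10, 0)]:
        kind = "metallic" if is_metallic(n, m) else "semiconducting"
        print(f"({n},{m}): diameter {nanotube_diameter_nm(n, m):.2f} nm, {kind}")
```

The rule ignores curvature effects, which can open small gaps in very narrow tubes, so it should be read as a first approximation only.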

Deyu Lu and Yan Li in 2006, at the UIUC Quad. Photo used with permission from Yan Li.

By this time Deyu Lu and Yan Li had started dating. While Li was pretty sure her dissertation topic would be carbon nanotubes, Lu was still looking for his project idea. Although Lu was by now working in Schulten's group, and as such, doing biophysics-related projects, he had gotten a master's degree in condensed matter physics back in China, and was very interested still in this field. In fact, he often talked to his girlfriend about her carbon nanotube headway. And he grew more and more fascinated by carbon nanotubes. One day he came upon a paper in the journal Nature, reporting that water actually conducts through a nanotube, as was shown in molecular dynamics calculations done by the authors. “I think this is probably the first work that I saw that related carbon nanotubes to some kind of biological environment,” notes Lu. “So I was amazed by the story there.”

Lu started thinking longer and harder about carbon nanotubes at that point. As he had training in condensed matter physics, where proper description of electrons is paramount, he started to think about polarization and its role in the water-nanotube interaction. Polarizability is essentially related to the degree of distortion of an electron cloud when near some kind of charge. Imagining again the honeycomb basis of the nanotube, at each apex the carbon atom is bonded to three other carbon atoms. But carbon has four valence electrons, so at each apex one electron is more or less itinerant. Looking at the whole nanotube now instead of just the individual carbon atoms comprising it, these roaming electrons can form clouds that distort if an electric field is applied; this is one example of how carbon nanotubes can become polarized. But polarization is also possible if the nanotube is filled with water molecules, which have dipole moments. Lu started wondering about how to give a proper description of the electronic behavior, how to characterize polarizability, and what might be the interaction between water and the nanotube. “So when I started to ask myself those questions, I felt this could be a very promising direction for me to explore,” Lu explains.

During this whole time, Lu was consulting with Yan Li, and they spent considerable time discussing carbon nanotubes, and they bounced ideas off each other. They finally settled on a methodology to use to characterize carbon nanotubes and their polarizability. The next step was to not only propose their idea to their advisors, but also see if they could sell a collaboration between the two groups. This was before Schulten had really immersed himself in the field of bionanotechnology, but Lu reports that Schulten gave him an energetic thumbs-up for the proposed project, and, in the Beckman fashion, was happy to consider a collaboration with Ravaioli. While this collaboration turned out to be very fruitful publication-wise, it also led to other profound realms. Yan Li and Deyu Lu married at the end of 2003.

The overall goal of the study was to look at polarization and then also do some simple molecular dynamics calculations. To understand the polarization, the couple needed to employ an electronic structure method, which, as its name suggests, looks at the electrons of the system to calculate its properties. Electronic structure methods are quantum mechanical, while molecular dynamics is considered classical. Deyu Lu says they settled on the method called tight binding because it was simple enough; one can do a lot with just pen and paper, but it also provides adequate physical insight. While using tight binding may sound straightforward, Schulten is clear in pointing out that what Lu and Li accomplished, using that level of theory to describe nanotubes, was quite a feat. Schulten is quite proud of what was accomplished.
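To give a flavor of why tight binding is a pen-and-paper method, here is a minimal sketch of the textbook nearest-neighbor model for the flat honeycomb sheet. It is not Lu and Li's calculation, which dealt with nanotubes and their polarizability; the hopping energy of 2.7 eV and the lattice constant of 0.246 nm are typical literature values assumed here for illustration. In this model the energy of the roaming electrons is E(k) = ±t·|1 + exp(i k·a1) + exp(i k·a2)|, where a1 and a2 are the two lattice vectors of the sheet.

```python
import cmath
import math

A = 0.246      # graphene lattice constant in nm (assumed textbook value)
T_HOP = 2.7    # nearest-neighbor hopping energy in eV (assumed typical value)

# The two lattice vectors of the honeycomb sheet.
A1 = (A * math.sqrt(3) / 2, A / 2)
A2 = (A * math.sqrt(3) / 2, -A / 2)

def band_energy(kx: float, ky: float, t: float = T_HOP) -> float:
    """Upper band energy t * |1 + exp(i k.a1) + exp(i k.a2)|; the lower band is its negative."""
    phase1 = cmath.exp(1j * (kx * A1[0] + ky * A1[1]))
    phase2 = cmath.exp(1j * (kx * A2[0] + ky * A2[1]))
    return t * abs(1 + phase1 + phase2)

# Corner of the hexagonal Brillouin zone, where the two bands touch.
K_POINT = (2 * math.pi / (math.sqrt(3) * A), 2 * math.pi / (3 * A))

if __name__ == "__main__":
    print("Band energy at zone center (eV):", band_energy(0.0, 0.0))
    print("Band energy at K point (eV):", band_energy(*K_POINT))
```

The bands touch at the K point, so the flat sheet has no gap there. Rolling the sheet into a tube restricts which values of k are allowed, and whether those allowed values pass through K is what separates metallic from semiconducting tubes in this simple picture.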

In fact, the couple had to contend with challenges at every level. There was a senior postdoc in Ravaioli's group who did provide lots of advice and help, Slava Rotkin, and Rotkin is even on some papers, but mainly Lu and Li had to figure out everything on their own. Lu says a big source of help to him was the slim book, Physical Properties of Carbon Nanotubes, by Saito, Dresselhaus, and Dresselhaus, which he read cover to cover. Schulten sees the constellation of personalities and groups as a key reason for the success of the nanotube work. “This was one of the really clear cases where it was proven that how well a group does with a project depends very often on the particular personality, giftedness, and ability of an individual,” says Schulten. “And it's not like the group can do anything they want.” Yan Li says she and her husband understood each other very well, and that their similar backgrounds–undergraduate physics majors, same class in graduate school–also played key roles. While the reasons for their success are numerous, it seems clear that the couple brought out the best science in each other.

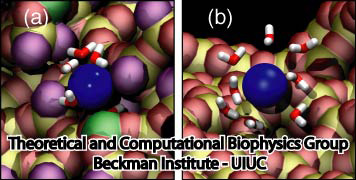

An ion in a carbon nanotube. Once it gets sucked in, the ion oscillates as shown.

Perhaps the highlight of the carbon nanotube study was an exciting observation Lu made, which resulted in a paper in Physical Review Letters, a premier journal for solid state physics research. In order to see the polarization in a carbon nanotube, a source of external charge needs to be supplied. At the beginning of the work, Lu and Li subjected the nanotube to an electric field, then filled it with water. Deyu Lu started to wonder what else he could do to elucidate the polarizability, for at that point he had written his own simple molecular dynamics code and had examined the water-nanotube system. And then it occurred to him: why not use an ion? He knew that a single ion was a simple enough system to incorporate into his existing molecular dynamics code. He also realized that since water is neutral, he should see an even bigger effect on the polarization if he used an ion.

Deyu Lu can still remember the day he tried his idea out. He put a potassium ion outside the carbon nanotube, and ran his program. The ion was sucked into the tube. Not only that, but then it started to oscillate! The ion couldn't get back out of the tube, a consequence of energy conservation. Lu immediately ran to Schulten's office to tell him. The extremely positive response he got from his advisor was unexpected. Schulten was thrilled. He told Lu that physicists love to see an oscillator, and that Lu and Li should expound on this result and dig deeper. The outcome was a 2005 publication, “Ion-Nanotube Terahertz Oscillator.” Schulten claims it is probably one of the best papers ever to come out of his Beckman group. For Deyu Lu, his project on the carbon nanotubes had lasting implications for his future. Recall, at this point in time, Schulten had not fully immersed himself in nano-engineering projects. Lu feels fortunate that Schulten allowed him to do something slightly different than most of the other research projects in the Theoretical and Computational Biophysics Group at Beckman. “In that regard, Klaus gave me his whole support and just let me do the thing I liked,” says Lu. “And I really feel fortunate for that situation.” But most of all, it fashioned the way he approaches research, and developed his independent thinking, skills that Lu says he relies on to this day.
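The energy-conservation argument can be illustrated with a toy one-dimensional simulation. The sketch below is not Lu's code and does not use the potential from the published paper; the attractive well, its depth and width, and the reduced units are all hypothetical choices made only to show the mechanism. An ion released at rest outside the well falls in, overshoots, and turns around at the mirror-image point, because its total energy never changes.

```python
def potential(z: float, depth: float = 1.0, width: float = 1.0) -> float:
    """Hypothetical attractive well centered on the tube (reduced units)."""
    return -depth / (1.0 + (z / width) ** 2)

def force(z: float, depth: float = 1.0, width: float = 1.0) -> float:
    """Force on the ion, F = -dU/dz, always pointing back toward z = 0."""
    return -2.0 * depth * z / (width ** 2 * (1.0 + (z / width) ** 2) ** 2)

def simulate(z0: float = 3.0, mass: float = 1.0, dt: float = 0.01, steps: int = 20000):
    """Velocity Verlet integration of an ion released at rest at z0."""
    z, v = z0, 0.0
    a = force(z) / mass
    positions, energies = [z], [potential(z)]
    for _ in range(steps):
        z += v * dt + 0.5 * a * dt * dt       # position update
        a_new = force(z) / mass               # force at the new position
        v += 0.5 * (a + a_new) * dt           # velocity update with averaged acceleration
        a = a_new
        positions.append(z)
        energies.append(0.5 * mass * v * v + potential(z))
    return positions, energies

def count_center_crossings(positions) -> int:
    """Number of times the ion passes through the middle of the tube."""
    return sum(1 for p, q in zip(positions, positions[1:]) if p * q < 0)

if __name__ == "__main__":
    zs, es = simulate()
    print("center crossings:", count_center_crossings(zs))
    print("largest excursion:", max(abs(z) for z in zs))
    print("energy drift:", abs(es[-1] - es[0]))
```

Because nothing in this toy model dissipates energy, the ion keeps shuttling back and forth indefinitely and never travels farther out than its starting distance.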

Nanodiscs–But How Do They Really Look

While Klaus Schulten admits he did not have a structured plan that he followed in order to acquire new projects in nano-engineering, his mere presence as a faculty member at the University of Illinois–a large school–offered him a cornucopia of collaborations in exactly this field. When Schulten was approached by a biochemist colleague seeking help in visualizing his new brainchild–a type of nanoparticle people thought he was nuts for developing–Schulten realized the computational microscope was a perfect tool for the job. This resulted in one of Schulten's best efforts in bionanoengineering, and is really a concerted University of Illinois effort, from its earliest beginnings to the present.

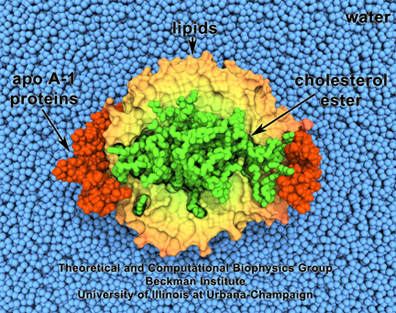

HDL, or good cholesterol. Lipids are surrounded by proteins, and enclose cholesterol.

Steve Sligar, a biochemist at Champaign-Urbana, had acquired an atomic force microscope in the 1990s, and little did he know that looking for candidates to image with his new machine would lead to the creation of what he dubbed a “nanodisc.” One of the particles he started studying was a lipoprotein, inspired by pioneering work begun at Urbana by Ana Jonas. Starting in the 1980s, Jonas had been studying the atherosclerotic process and the role of lipoproteins. Most people are aware of lipoproteins as they relate to human health: good cholesterol, or HDL (high density lipoprotein), circulates in the blood collecting cholesterol in the body. Jonas actually fabricated HDL particles in her lab. She even persuaded Schulten to help her visualize these reconstituted particles in a project they worked on in 1997. HDL particles look like orbs made of lipids, with, for example, cholesterol inside, and a protein on the outside sort of binding the whole thing together, as seen in the image.

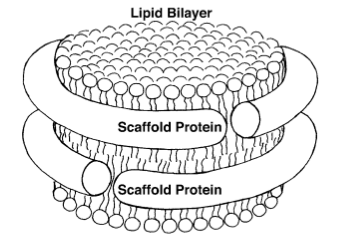

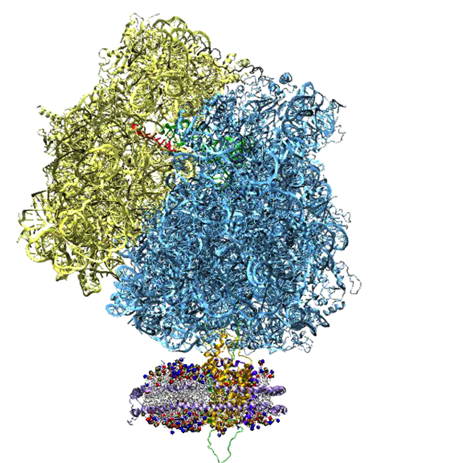

Sligar and his team started making their own nanoparticles in the lab, reminiscent of Jonas's HDL particles. Sligar then genetically engineered various proteins (called apolipoproteins, or more instructively, membrane scaffold proteins) to form around the tiny bits of lipids. When the membrane scaffold proteins and the lipids were thrown together, they assembled themselves into structures that Sligar decided to name “nanodiscs.” Then he had a “wild idea,” according to an historical article he penned about nanodiscs, published in 2013. Membrane proteins, as their name would suggest, live in membranes, and studying these proteins has presented immense challenges to researchers. This is because outside of the membrane, the proteins become inactive and die. As mentioned above, in 1988 a Nobel Prize was awarded for actually isolating a membrane protein on its own and then forming crystals of it suitable for structure determination. The Stockholm award speaks to the challenges of working with membrane proteins. At this point, around the dawn of the new millennium, Sligar was also working with membrane proteins in his lab and growing frustrated. So his “wild idea” was to make a mixture of scaffold protein, lipid, and the membrane protein, and hope that in the process of self-assembly, the membrane protein would find itself a natural home inside the lipids. It worked! So Sligar then decided to hone the nanodisc assembly process, and provide the recipe for others wanting to study membrane proteins in their natural environment. In 2013, he offered an historical overview of the reactions to his initial nanodisc idea:

While the public response to this bio-assembly system was initially somewhat muted due to concerns that deeply embedded and totally integral membrane proteins would not readily be incorporated, subsequent efforts over the next decade (including that by many independent laboratories) demonstrated the broad applicability of nanodiscs for studying the breadth and complexity of membrane protein complexes.