#include <ComputePme.h>

Public Member Functions

| ComputePme (ComputeID c, PatchID pid) | |

| virtual | ~ComputePme () |

| void | initialize () |

| void | atomUpdate () |

| int | noWork () |

| void | doWork () |

| void | doQMWork () |

| void | ungridForces () |

| void | setMgr (ComputePmeMgr *mgr) |

Public Member Functions inherited from Compute

| Compute (ComputeID) | |

| int | type () |

| virtual | ~Compute () |

| void | setNumPatches (int n) |

| int | getNumPatches () |

| virtual void | patchReady (PatchID, int doneMigration, int seq) |

| virtual void | finishPatch (int) |

| int | sequence (void) |

| int | priority (void) |

| int | getGBISPhase (void) |

| virtual void | gbisP2PatchReady (PatchID, int seq) |

| virtual void | gbisP3PatchReady (PatchID, int seq) |

Public Member Functions inherited from ComputePmeUtil

| ComputePmeUtil () | |

| ~ComputePmeUtil () | |

Friends

| class | ComputePmeMgr |

Additional Inherited Members

Static Public Member Functions inherited from ComputePmeUtil

| static void | select (void) |

Public Attributes inherited from Compute

| const ComputeID | cid |

| LDObjHandle | ldObjHandle |

| LocalWorkMsg *const | localWorkMsg |

Static Public Attributes inherited from ComputePmeUtil

| static int | numGrids |

| static Bool | alchOn |

| static Bool | alchFepOn |

| static Bool | alchThermIntOn |

| static Bool | alchDecouple |

| static BigReal | alchElecLambdaStart |

| static Bool | lesOn |

| static int | lesFactor |

| static Bool | pairOn |

| static Bool | selfOn |

| static Bool | LJPMEOn |

Protected Member Functions inherited from Compute

| void | enqueueWork () |

Protected Attributes inherited from Compute

| int | computeType |

| int | basePriority |

| int | gbisPhase |

| int | gbisPhasePriority [3] |

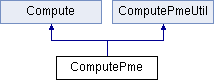

Detailed Description

Definition at line 48 of file ComputePme.h.
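The generated page carries no detailed description. As a rough orientation only, the member list above implies that `ComputePme` derives from both `Compute` and `ComputePmeUtil`, overrides `initialize()`, `atomUpdate()`, `noWork()`, and `doWork()`, and stores a manager pointer through `setMgr()`. The sketch below mirrors that shape with mock classes. Everything other than the class and method names listed on this page is an assumption made for illustration: the `int` aliases for `ComputeID` and `PatchID`, the `calls` log, `hasMgr()`, `patch()`, `driveThroughBase()`, the member name `myMgr`, and the rule that `doWork()` runs only when `noWork()` returns 0. It is not the NAMD implementation.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Stand-in types: hypothetical simplifications, not the NAMD definitions.
using ComputeID = int;
using PatchID = int;

// Mock of the Compute base class, reduced to the members this sketch needs.
class Compute {
public:
  explicit Compute(ComputeID c) : cid(c) {}
  virtual ~Compute() = default;
  virtual void initialize() {}
  virtual void atomUpdate() {}
  virtual int noWork() { return 0; }
  virtual void doWork() {}
  const ComputeID cid;
};

// Mock of ComputePmeUtil, reduced to a single static attribute.
class ComputePmeUtil {
public:
  static int numGrids;
};
int ComputePmeUtil::numGrids = 1;

class ComputePmeMgr;  // only a pointer is stored, so a forward declaration suffices

class ComputePme : public Compute, public ComputePmeUtil {
public:
  ComputePme(ComputeID c, PatchID pid) : Compute(c), patchID(pid) {}
  void initialize() override { calls.push_back("initialize"); }
  void atomUpdate() override { calls.push_back("atomUpdate"); }
  int noWork() override { return 0; }
  void doWork() override { calls.push_back("doWork"); }
  void setMgr(ComputePmeMgr *mgr) { myMgr = mgr; }

  bool hasMgr() const { return myMgr != nullptr; }  // hypothetical helper
  PatchID patch() const { return patchID; }         // hypothetical helper
  std::vector<std::string> calls;                   // hypothetical call log

private:
  friend class ComputePmeMgr;
  PatchID patchID;
  ComputePmeMgr *myMgr = nullptr;  // hypothetical member name
};

// Drives the object through a Compute reference to show virtual dispatch.
// The noWork() check before doWork() is an assumed scheduling rule.
inline std::vector<std::string> driveThroughBase(ComputePme &pme) {
  Compute &base = pme;
  base.initialize();
  base.atomUpdate();
  if (!base.noWork()) base.doWork();
  return pme.calls;
}
```

Calling `driveThroughBase()` on a `ComputePme` constructed with a compute ID and a patch ID records the three overridden calls in order, which shows that the overrides are reached through the `Compute` interface.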

Constructor & Destructor Documentation

◆ ComputePme()

| ComputePme::ComputePme | ( | ComputeID c, PatchID pid | ) |

Definition at line 2712 of file ComputePme.C.

References Compute::basePriority, DebugM, PmeGrid::dim2, PmeGrid::dim3, PmeGrid::K1, PmeGrid::K2, PmeGrid::K3, ComputePmeUtil::numGrids, Node::Object(), PmeGrid::order, PME_PRIORITY, Compute::setNumPatches(), Node::simParameters, and simParams.

◆ ~ComputePme()

| ComputePme::~ComputePme | ( | ) | virtual |

Definition at line 2985 of file ComputePme.C.

Member Function Documentation

◆ atomUpdate()

| void ComputePme::atomUpdate | ( | ) | virtual |

Reimplemented from Compute.

Definition at line 2710 of file ComputePme.C.

◆ doQMWork()

| void ComputePme::doQMWork | ( | ) |

Definition at line 3078 of file ComputePme.C.

References doWork(), Molecule::get_numQMAtoms(), Molecule::get_qmAtmChrg(), Molecule::get_qmAtmIndx(), Molecule::get_qmAtomGroup(), Patch::getCompAtomExtInfo(), Patch::getNumAtoms(), Node::molecule, and Node::Object().

◆ doWork()

| void ComputePme::doWork | ( | ) | virtual |

Reimplemented from Compute.

Definition at line 3136 of file ComputePme.C.

References ComputePmeMgr::a_data_dev, ComputePmeMgr::a_data_host, ComputePmeUtil::alchDecouple, ComputePmeUtil::alchOn, Compute::basePriority, ResizeArray< Elem >::begin(), PmeParticle::cg, CompAtom::charge, ComputePmeMgr::chargeGridReady(), Compute::cid, Box< Owner, Data >::close(), COMPUTE_HOME_PRIORITY, COULOMB, ComputePmeMgr::cuda_atoms_alloc, ComputePmeMgr::cuda_atoms_count, ComputePmeMgr::cuda_busy, cuda_errcheck(), ComputePmeMgr::cuda_lock, ComputePmeMgr::cuda_submit_charges(), ComputePmeMgr::cuda_submit_charges_deque, DebugM, deviceCUDA, ComputeNonbondedUtil::dielectric_1, CompAtomExt::dispcoef, Flags::doMolly, ComputeNonbondedUtil::ewaldcof, PmeRealSpace::fill_charges(), Patch::flags, Patch::getCompAtomExtInfo(), DeviceCUDA::getDeviceID(), DeviceCUDA::getMasterPe(), Patch::getNumAtoms(), PmeGrid::K1, PmeGrid::K2, PmeGrid::K3, Flags::lattice, ComputePmeMgr::cuda_submit_charges_args::lattice, ComputePmeUtil::lesOn, ComputeNonbondedUtil::LJewaldcof, ComputePmeUtil::LJPMEOn, ComputePmeMgr::cuda_submit_charges_args::mgr, NAMD_bug(), ComputePmeUtil::numGrids, Box< Owner, Data >::open(), PmeGrid::order, ComputePmeUtil::pairOn, CompAtom::partition, PATCH_PRIORITY, PME_OFFLOAD_PRIORITY, PME_PRIORITY, ComputePmeMgr::pmeComputes, CompAtom::position, ResizeArray< Elem >::resize(), scale_coordinates(), ComputeNonbondedUtil::scaling, ComputePmeUtil::selfOn, Compute::sequence(), ComputePmeMgr::cuda_submit_charges_args::sequence, PmeRealSpace::set_num_atoms(), ResizeArray< Elem >::size(), SQRT_PI, ComputePmeMgr::submitReductions(), TRACE_COMPOBJ_IDOFFSET, ungridForces(), PmeParticle::x, Vector::x, PmeParticle::y, Vector::y, PmeParticle::z, and Vector::z.

Referenced by doQMWork().

◆ initialize()

| void ComputePme::initialize | ( | ) | virtual |

Reimplemented from Compute.

Definition at line 2751 of file ComputePme.C.

References ComputePmeMgr::cuda_atoms_count, Patch::getNumAtoms(), NAMD_bug(), PatchMap::Object(), Patch::registerAvgPositionPickup(), Patch::registerForceDeposit(), and Patch::registerPositionPickup().

◆ noWork()

| int ComputePme::noWork | ( | ) | virtual |

Reimplemented from Compute.

Definition at line 3039 of file ComputePme.C.

References ResizeArray< Elem >::add(), Flags::doFullElectrostatics, Patch::flags, ComputePmeMgr::pmeComputes, ResizeArray< Elem >::size(), Box< Owner, Data >::skip(), and SubmitReduction::submit().
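The reference list for `noWork()` names `Flags::doFullElectrostatics`, `Box::skip()`, and `SubmitReduction::submit()`, which suggests a gate: on steps without full electrostatics the compute skips its data boxes and reports that it has no work. The sketch below is one guess at that pattern, not the function body, which this page does not show. The struct `PmeStepGate`, the stand-in `Flags`, `Box`, and `SubmitReduction` types, and the names `positionBox`, `forceBox`, `noWorkCount`, and `numComputes` are all hypothetical.

```cpp
#include <cassert>

// Hypothetical stand-ins for the NAMD types named in the reference list.
struct Flags { bool doFullElectrostatics = false; };
struct Box { int skipped = 0; void skip() { ++skipped; } };
struct SubmitReduction { int submitted = 0; void submit() { ++submitted; } };

struct PmeStepGate {
  Flags flags;
  Box positionBox;            // hypothetical name
  Box forceBox;               // hypothetical name
  SubmitReduction reduction;
  int numComputes = 1;        // stands in for pmeComputes.size()
  int noWorkCount = 0;        // hypothetical counter of skipping computes

  // Returns 1 when there is nothing to do this step, 0 otherwise.
  int noWork() {
    if (flags.doFullElectrostatics) return 0;  // PME is needed this step
    positionBox.skip();                        // release boxes unread
    forceBox.skip();
    if (++noWorkCount == numComputes) {        // last compute to skip
      noWorkCount = 0;
      reduction.submit();                      // submit an empty contribution
    }
    return 1;
  }
};
```

In this sketch the reduction is submitted once per step, by the last compute that skips, so a step with no PME work still completes its reduction.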

◆ setMgr()

| void ComputePme::setMgr | ( | ComputePmeMgr * | mgr | ) | inline |

Definition at line 58 of file ComputePme.h.

◆ ungridForces()

| void ComputePme::ungridForces | ( | ) |

Definition at line 4090 of file ComputePme.C.

References ADD_VECTOR_OBJECT, ComputePmeUtil::alchDecouple, ComputePmeUtil::alchFepOn, ComputePmeUtil::alchOn, ResizeArray< Elem >::begin(), Box< Owner, Data >::close(), PmeRealSpace::compute_forces(), endi(), Results::f, Patch::flags, Patch::getNumAtoms(), iERROR(), iout, Flags::lattice, ComputePmeUtil::lesFactor, ComputePmeUtil::lesOn, ComputePmeUtil::LJPMEOn, NAMD_bug(), ComputePmeUtil::numGrids, Node::Object(), Box< Owner, Data >::open(), ComputePmeUtil::pairOn, ResizeArray< Elem >::resize(), scale_forces(), ComputePmeUtil::selfOn, Compute::sequence(), Node::simParameters, simParams, Results::slow, Flags::step, Vector::x, Vector::y, and Vector::z.

Referenced by doWork().
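The "References" and "Referenced by" entries on this page give a small call chain: `doQMWork()` references `doWork()`, and `doWork()` references `ungridForces()`. The sketch below records that chain in a trace. The page does not say under which conditions each call happens, so the `forcesReady` flag is a hypothetical placeholder for whatever condition the real code checks, and `PmeCallChain` is a mock, not the NAMD class.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Mock that only records the order in which the three methods run.
struct PmeCallChain {
  std::vector<std::string> trace;
  bool forcesReady = false;  // hypothetical placeholder condition

  void ungridForces() { trace.push_back("ungridForces"); }

  void doWork() {
    trace.push_back("doWork");
    if (forcesReady) ungridForces();  // edge: doWork() -> ungridForces()
  }

  void doQMWork() {
    trace.push_back("doQMWork");
    doWork();                         // edge: doQMWork() -> doWork()
  }
};
```

With `forcesReady` set, a call to `doQMWork()` reaches all three methods in order. Without it, `doWork()` returns before ungridding.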

Friends And Related Function Documentation

◆ ComputePmeMgr

| friend class ComputePmeMgr | friend |

Definition at line 60 of file ComputePme.h.

The documentation for this class was generated from the following files: ComputePme.h and ComputePme.C.

1.8.14
