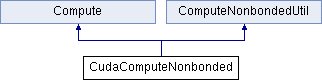

#include <CudaComputeNonbonded.h>

Classes

| Kind | Name |
|---|---|
| struct | ComputeRecord |
| struct | PatchRecord |

Detailed Description

Definition at line 31 of file CudaComputeNonbonded.h.

Constructor & Destructor Documentation

◆ CudaComputeNonbonded()

CudaComputeNonbonded::CudaComputeNonbonded(ComputeID c, int deviceID, CudaNonbondedTables &cudaNonbondedTables, bool doStreaming)

Definition at line 39 of file CudaComputeNonbonded.C.

References cudaCheck, Compute::gbisPhase, NAMD_die(), Node::Object(), Node::simParameters, and simParams.

◆ ~CudaComputeNonbonded()

CudaComputeNonbonded::~CudaComputeNonbonded()

Definition at line 119 of file CudaComputeNonbonded.C.

References cudaCheck, deallocate_host(), and ComputeMgr::sendUnregisterBoxesOnPe().

Member Function Documentation

◆ assignPatches()

void CudaComputeNonbonded::assignPatches(ComputeMgr *computeMgrIn)

Definition at line 397 of file CudaComputeNonbonded.C.

References PatchMap::basePatchIDList(), deviceCUDA, findHomePatchPe(), findProxyPatchPes(), DeviceCUDA::getDeviceCount(), DeviceCUDA::getMasterPeForDeviceID(), Compute::getNumPatches(), DeviceCUDA::getNumPesSharingDevice(), DeviceCUDA::getPesSharingDevice(), ComputePmeCUDAMgr::isPmePe(), NAMD_bug(), PatchMap::Object(), ComputePmeCUDAMgr::Object(), PatchMap::ObjectOnPe(), ComputeMgr::sendAssignPatchesOnPe(), and Compute::setNumPatches().

Referenced by ComputeMgr::createComputes().
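The reference list for assignPatches() (findHomePatchPe(), findProxyPatchPes(), DeviceCUDA::getPesSharingDevice()) suggests that each patch is placed on a PE that shares the device, preferring the patch's home PE or one of its proxy PEs. The C++ sketch below illustrates one such policy under that assumption; the function assignPatchesToPes(), its inputs, and the round-robin fallback are hypothetical and are not NAMD's implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical sketch: pick, for each patch, a PE that shares this GPU.
// homePe[p]   : PE that owns the home copy of patch p (assumed input)
// proxyPes[p] : PEs that hold proxies of patch p (assumed input)
// devicePes   : PEs sharing this device (the role getPesSharingDevice() plays)
std::map<int, std::vector<int>> assignPatchesToPes(
    const std::vector<int>& homePe,
    const std::vector<std::vector<int>>& proxyPes,
    const std::vector<int>& devicePes) {
  assert(!devicePes.empty());
  assert(homePe.size() == proxyPes.size());
  auto sharesDevice = [&](int pe) {
    for (int d : devicePes) if (d == pe) return true;
    return false;
  };
  std::map<int, std::vector<int>> patchesOnPe;
  std::size_t next = 0;  // round-robin cursor for patches with no local PE
  for (std::size_t p = 0; p < homePe.size(); ++p) {
    int chosen = -1;
    if (sharesDevice(homePe[p])) {
      chosen = homePe[p];                   // 1) prefer the home PE
    } else {
      for (int pe : proxyPes[p]) {          // 2) then a proxy PE on this device
        if (sharesDevice(pe)) { chosen = pe; break; }
      }
    }
    if (chosen < 0) {                       // 3) otherwise spread round-robin
      chosen = devicePes[next];
      next = (next + 1) % devicePes.size();
    }
    patchesOnPe[chosen].push_back(static_cast<int>(p));
  }
  return patchesOnPe;
}
```

With devicePes = {0, 1}, a patch homed on PE 0 stays there, a patch with a proxy on PE 1 goes to PE 1, and patches with neither alternate between PE 0 and PE 1.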

◆ assignPatchesOnPe()

void CudaComputeNonbonded::assignPatchesOnPe()

Definition at line 335 of file CudaComputeNonbonded.C.

References ResizeArray< Elem >::add(), NAMD_bug(), PatchMap::node(), PatchMap::Object(), and ResizeArray< Elem >::size().

Referenced by ComputeMgr::recvAssignPatchesOnPe().

◆ atomUpdate()

virtual

Reimplemented from Compute.

Definition at line 702 of file CudaComputeNonbonded.C.

◆ doWork()

virtual

Reimplemented from Compute.

Definition at line 1184 of file CudaComputeNonbonded.C.

References SimParameters::CUDASOAintegrate, Flags::doEnergy, Flags::doFullElectrostatics, Flags::doMinimize, Flags::doNonbonded, Flags::doVirial, Compute::gbisPhase, NAMD_bug(), Node::Object(), openBoxesOnPe(), CudaTileListKernel::prepareBuffers(), ComputeMgr::sendOpenBoxesOnPe(), Node::simParameters, and simParams.

◆ finishPatches()

void CudaComputeNonbonded::finishPatches()

Definition at line 1963 of file CudaComputeNonbonded.C.

References cudaCheck, SimParameters::CUDASOAintegrate, finishPatchesOnPe(), and ComputeMgr::sendFinishPatchesOnPe().
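finishPatches() references both finishPatchesOnPe() and ComputeMgr::sendFinishPatchesOnPe(), and finishPatchesOnPe() is also reached through ComputeMgr::recvFinishPatchesOnPe(). That matches a common pattern: run the work directly when already on the target PE, otherwise send a message and let the receiving PE run it. The sketch below models that pattern with a hypothetical Mailbox type and dispatchToPe() function standing in for ComputeMgr's messaging; neither is the Charm++ or NAMD API.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical stand-in for the messaging layer: one work queue per PE.
struct Mailbox {
  std::vector<std::queue<std::function<void()>>> perPe;
  explicit Mailbox(int numPes) : perPe(numPes) {}
  void send(int pe, std::function<void()> work) { perPe.at(pe).push(std::move(work)); }
  // Drain one PE's queue, as that PE's scheduler would.
  void deliver(int pe) {
    auto& q = perPe.at(pe);
    while (!q.empty()) {
      auto w = std::move(q.front());
      q.pop();
      w();
    }
  }
};

// Run work immediately if we are already on targetPe; otherwise enqueue it there.
void dispatchToPe(int myPe, int targetPe, Mailbox& mb,
                  const std::function<void()>& work) {
  assert(targetPe >= 0);
  if (targetPe == myPe) {
    work();                    // local fast path, no message needed
  } else {
    mb.send(targetPe, work);   // remote: the receiving PE runs it later
  }
}
```

The local fast path avoids a message round trip when the caller already owns the patches, which is the only behavioral difference between the two branches.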

◆ finishPatchesOnPe()

void CudaComputeNonbonded::finishPatchesOnPe()

Definition at line 1951 of file CudaComputeNonbonded.C.

Referenced by finishPatches(), and ComputeMgr::recvFinishPatchesOnPe().

◆ finishPatchOnPe()

void CudaComputeNonbonded::finishPatchOnPe(int i)

Definition at line 1958 of file CudaComputeNonbonded.C.

Referenced by ComputeMgr::recvFinishPatchOnPe().

◆ finishReductions()

void CudaComputeNonbonded::finishReductions()

Definition at line 1704 of file CudaComputeNonbonded.C.

References ADD_TENSOR_OBJECT, cudaCheck, VirialEnergy::energyElec, VirialEnergy::energyElec_s, VirialEnergy::energyElec_ti_1, VirialEnergy::energyElec_ti_2, VirialEnergy::energyGBIS, VirialEnergy::energySlow, VirialEnergy::energySlow_s, VirialEnergy::energySlow_ti_1, VirialEnergy::energySlow_ti_2, VirialEnergy::energyVdw, VirialEnergy::energyVdw_s, VirialEnergy::energyVdw_ti_1, VirialEnergy::energyVdw_ti_2, SimParameters::GBISOn, getCurrentReduction(), CudaTileListKernel::getNumExcluded(), SubmitReduction::item(), NAMD_bug(), REDUCTION_COMPUTE_CHECKSUM, REDUCTION_ELECT_ENERGY, REDUCTION_ELECT_ENERGY_F, REDUCTION_ELECT_ENERGY_SLOW, REDUCTION_ELECT_ENERGY_SLOW_F, REDUCTION_ELECT_ENERGY_SLOW_TI_1, REDUCTION_ELECT_ENERGY_SLOW_TI_2, REDUCTION_ELECT_ENERGY_TI_1, REDUCTION_ELECT_ENERGY_TI_2, REDUCTION_EXCLUSION_CHECKSUM_CUDA, REDUCTION_LJ_ENERGY, REDUCTION_LJ_ENERGY_F, REDUCTION_LJ_ENERGY_TI_1, REDUCTION_LJ_ENERGY_TI_2, SubmitReduction::submit(), VirialEnergy::virial, VirialEnergy::virialSlow, Tensor::xx, Tensor::xy, Tensor::xz, Tensor::yx, Tensor::yy, Tensor::yz, Tensor::zx, Tensor::zy, and Tensor::zz.

Referenced by ComputeMgr::recvFinishReductions().
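finishReductions() uses ADD_TENSOR_OBJECT together with the nine Tensor components (Tensor::xx through Tensor::zz) of VirialEnergy::virial and VirialEnergy::virialSlow. Below is a minimal sketch of that kind of accumulation, assuming the nine components occupy consecutive reduction slots; Tensor3, addTensor(), and the slot layout are illustrative only and do not reproduce NAMD's ReductionMgr.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a 3x3 virial tensor.
struct Tensor3 {
  double xx, xy, xz, yx, yy, yz, zx, zy, zz;
};

// Add all nine components into consecutive slots starting at `base`
// (the consecutive layout is an assumption made for this sketch).
void addTensor(std::vector<double>& slots, std::size_t base, const Tensor3& t) {
  assert(base + 9 <= slots.size());
  const std::array<double, 9> c = {t.xx, t.xy, t.xz, t.yx, t.yy,
                                   t.yz, t.zx, t.zy, t.zz};
  for (std::size_t i = 0; i < c.size(); ++i) slots[base + i] += c[i];
}
```

Because the slots are accumulated with `+=`, contributions from several computes (or from both the regular and slow virials into separate slot ranges) simply add up before the reduction is submitted.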

◆ gbisP2PatchReady()

virtual

Reimplemented from Compute.

Definition at line 277 of file CudaComputeNonbonded.C.

References Compute::gbisP2PatchReady().

◆ gbisP3PatchReady()

virtual

Reimplemented from Compute.

Definition at line 283 of file CudaComputeNonbonded.C.

References Compute::gbisP3PatchReady().

◆ getCurrentReduction()

SubmitReduction *CudaComputeNonbonded::getCurrentReduction()

Definition at line 2425 of file CudaComputeNonbonded.C.

References Node::Object(), Node::simParameters, and simParams.

Referenced by finishReductions(), and launchWork().

◆ getDoTable()

static

◆ getNonbondedCoef()

static

Definition at line 2377 of file CudaComputeNonbonded.C.

References ComputeNonbondedUtil::c1, ComputeNonbondedUtil::cutoff, ComputeNonbondedUtil::cutoff2, CudaNBConstants::e_0, CudaNBConstants::e_0_slow, CudaNBConstants::e_1, CudaNBConstants::e_2, CudaNBConstants::ewald_0, CudaNBConstants::ewald_1, CudaNBConstants::ewald_2, CudaNBConstants::ewald_3_slow, ComputeNonbondedUtil::ewaldcof, CudaNBConstants::lj_0, CudaNBConstants::lj_1, CudaNBConstants::lj_2, CudaNBConstants::lj_3, CudaNBConstants::lj_4, CudaNBConstants::lj_5, ComputeNonbondedUtil::pi_ewaldcof, simParams, CudaNBConstants::slowScale, ComputeNonbondedUtil::switchOn, and ComputeNonbondedUtil::switchOn2.
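getNonbondedCoef() draws on ComputeNonbondedUtil::cutoff, cutoff2, switchOn, switchOn2, and c1. For reference, the standard CHARMM-style van der Waals switching function, S(r) = (cutoff2 - r^2)^2 (cutoff2 + 2 r^2 - 3 switchOn2) / (cutoff2 - switchOn2)^3 for switchOn <= r <= cutoff, is sketched below. Treating c1 as the precomputed factor 1/(cutoff2 - switchOn2)^3 is an assumption, and switchingFactor() is a hypothetical helper, not part of NAMD.

```cpp
#include <cassert>

// CHARMM-style switching function: 1 inside switchOn, 0 beyond cutoff,
// and a smooth taper in between.
double switchingFactor(double r, double switchOn, double cutoff) {
  assert(0.0 <= switchOn && switchOn < cutoff);
  const double r2 = r * r;
  const double switchOn2 = switchOn * switchOn;
  const double cutoff2 = cutoff * cutoff;
  if (r2 <= switchOn2) return 1.0;
  if (r2 >= cutoff2) return 0.0;
  const double denom = cutoff2 - switchOn2;
  const double c1 = 1.0 / (denom * denom * denom);  // depends only on the cutoffs
  const double d = cutoff2 - r2;
  return d * d * (cutoff2 + 2.0 * r2 - 3.0 * switchOn2) * c1;
}
```

Only c1 depends solely on the cutoff parameters, which is why a constant like it can be computed once and handed to the GPU kernel rather than recomputed per pair.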

◆ getPatches()

inline

Definition at line 329 of file CudaComputeNonbonded.h.

◆ initialize()

virtual

Reimplemented from Compute.

Definition at line 642 of file CudaComputeNonbonded.C.

References ATOMIC_BINS, cudaCheck, SimParameters::CUDASOAintegrateMode, deviceCUDA, SimParameters::drudeNbtholeCut, SimParameters::drudeOn, DeviceCUDA::getDeviceIndex(), PatchMap::numPatches(), PatchMap::Object(), Node::Object(), ReductionMgr::Object(), Compute::priority(), REDUCTIONS_BASIC, REDUCTIONS_GPURESIDENT, Node::simParameters, and ReductionMgr::willSubmit().

Referenced by ComputeMgr::createComputes().

◆ launchWork()

void CudaComputeNonbonded::launchWork()

Definition at line 1248 of file CudaComputeNonbonded.C.

References CudaComputeNonbonded::PatchRecord::atomStart, ResizeArray< Elem >::begin(), cudaCheck, ComputeNonbondedUtil::cutoff, ResizeArray< Elem >::end(), Patch::flags, Compute::gbisPhase, getCurrentReduction(), CudaTileListKernel::getEmptyPatches(), CudaTileListKernel::getNumEmptyPatches(), CudaTileListKernel::getNumPatches(), Patch::getPatchID(), CudaComputeNonbondedKernel::getPatchReadyQueue(), PatchMap::homePatchList(), SubmitReduction::item(), NAMD_bug(), NAMD_EVENT_START, NAMD_EVENT_STOP, CudaComputeNonbonded::PatchRecord::numAtoms, PatchMap::Object(), Node::Object(), HomePatchElem::patch, CudaComputeNonbondedKernel::reduceVirialEnergy(), REDUCTION_PAIRLIST_WARNINGS, reSortTileLists(), Flags::savePairlists, Node::simParameters, simParams, Flags::step, and Flags::usePairlists.

Referenced by openBoxesOnPe(), and ComputeMgr::recvLaunchWork().

◆ messageEnqueueWork()

void CudaComputeNonbonded::messageEnqueueWork()

Definition at line 1097 of file CudaComputeNonbonded.C.

References WorkDistrib::messageEnqueueWork(), and NAMD_bug().

Referenced by ComputeMgr::recvMessageEnqueueWork().

◆ noWork()

virtual

Reimplemented from Compute.

Definition at line 1149 of file CudaComputeNonbonded.C.

References ComputeMgr::sendMessageEnqueueWork().

◆ openBoxesOnPe()

void CudaComputeNonbonded::openBoxesOnPe()

Definition at line 1103 of file CudaComputeNonbonded.C.

References SimParameters::CUDASOAintegrate, Compute::getNumPatches(), launchWork(), NAMD_bug(), NAMD_EVENT_START, NAMD_EVENT_STOP, Node::Object(), and ComputeMgr::sendLaunchWork().

Referenced by doWork(), and ComputeMgr::recvOpenBoxesOnPe().
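openBoxesOnPe() references Compute::getNumPatches(), launchWork(), and ComputeMgr::sendLaunchWork(), and is reached both from doWork() and from ComputeMgr::recvOpenBoxesOnPe(). One plausible reading is a countdown: PEs report their patches as opened, and the report that completes the set triggers the launch. The sketch below shows such a gate with a hypothetical LaunchGate class; whether NAMD counts patches exactly this way is an assumption.

```cpp
#include <atomic>
#include <cassert>
#include <functional>
#include <utility>

// Hypothetical gate: the report that brings the opened-patch count up to the
// total fires the launch callback exactly once per step.
class LaunchGate {
 public:
  LaunchGate(int totalPatches, std::function<void()> launch)
      : total_(totalPatches), launch_(std::move(launch)) {
    assert(totalPatches > 0);
  }
  // Record n newly opened patches; returns true if this call triggered the launch.
  bool patchesOpened(int n) {
    const int before = opened_.fetch_add(n);
    assert(before + n <= total_);
    if (before + n == total_) {
      launch_();
      return true;
    }
    return false;
  }
  // Re-arm the gate for the next step.
  void reset() { opened_.store(0); }

 private:
  const int total_;
  std::function<void()> launch_;
  std::atomic<int> opened_{0};
};
```

Using an atomic fetch_add means that, if several PEs report concurrently, exactly one of them observes the final count and performs the launch.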

◆ patchReady()

virtual

Reimplemented from Compute.

Definition at line 260 of file CudaComputeNonbonded.C.

References SimParameters::CUDASOAintegrate, NAMD_bug(), Compute::patchReady(), and SimParameters::useDeviceMigration.

◆ registerComputePair()

Definition at line 198 of file CudaComputeNonbonded.C.

References PatchMap::center(), Compute::cid, PatchMap::Object(), Vector::x, Vector::y, and Vector::z.

◆ registerComputeSelf()

◆ reSortTileLists()

void CudaComputeNonbonded::reSortTileLists()

Definition at line 2018 of file CudaComputeNonbonded.C.

References cudaCheck, Node::Object(), CudaTileListKernel::reSortTileLists(), Node::simParameters, and simParams.

Referenced by launchWork().

◆ skipPatchesOnPe()

void CudaComputeNonbonded::skipPatchesOnPe()

Definition at line 814 of file CudaComputeNonbonded.C.

References Compute::getNumPatches(), NAMD_bug(), and ComputeMgr::sendFinishReductions().

Referenced by ComputeMgr::recvSkipPatchesOnPe().

◆ unregisterBoxesOnPe()

void CudaComputeNonbonded::unregisterBoxesOnPe()

Definition at line 176 of file CudaComputeNonbonded.C.

References NAMD_bug().

Referenced by ComputeMgr::recvUnregisterBoxesOnPe().

◆ updatePatchOrder()

void CudaComputeNonbonded::updatePatchOrder(const std::vector< CudaLocalRecord > &data)

Definition at line 613 of file CudaComputeNonbonded.C.

The documentation for this class was generated from the following files: CudaComputeNonbonded.h, CudaComputeNonbonded.C


Generated by Doxygen 1.8.14