#include <CudaPmeSolverUtil.h>

| Public Member Functions | |
|---|---|
| | CudaPmeRealSpaceCompute (PmeGrid pmeGrid, const int jblock, const int kblock, int deviceID, cudaStream_t stream) |
| | ~CudaPmeRealSpaceCompute () |
| void | copyAtoms (const int numAtoms, const CudaAtom *atoms) |
| void | spreadCharge (Lattice &lattice) |
| void | gatherForce (Lattice &lattice, CudaForce *force) |
| void | gatherForceSetCallback (ComputePmeCUDADevice *devicePtr_in) |
| void | waitGatherForceDone () |

| Public Member Functions inherited from PmeRealSpaceCompute | |
|---|---|
| | PmeRealSpaceCompute (PmeGrid pmeGrid, const int jblock, const int kblock, unsigned int grid=0) |
| virtual | ~PmeRealSpaceCompute () |
| float * | getData () |
| int | getDataSize () |
| void | setGrid (unsigned int i) |

Additional Inherited Members

| Static Public Member Functions inherited from PmeRealSpaceCompute | |
|---|---|
| static double | calcGridCoord (const double x, const double recip11, const int nfftx) |
| static void | calcGridCoord (const double x, const double y, const double z, const double recip11, const double recip22, const double recip33, const int nfftx, const int nffty, const int nfftz, double &frx, double &fry, double &frz) |
| static void | calcGridCoord (const float x, const float y, const float z, const float recip11, const float recip22, const float recip33, const int nfftx, const int nffty, const int nfftz, float &frx, float &fry, float &frz) |
| static void | calcGridCoord (const float x, const float y, const float z, const int nfftx, const int nffty, const int nfftz, float &frx, float &fry, float &frz) |
| static void | calcGridCoord (const double x, const double y, const double z, const int nfftx, const int nffty, const int nfftz, double &frx, double &fry, double &frz) |
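The `calcGridCoord` overloads that take `recip11`, `recip22`, and `recip33` map a Cartesian coordinate onto the PME grid. The sketch below shows one common way to do that. It assumes an orthorhombic cell, where `recip11` is 1/L for a box of length L along x. It also assumes the scaled coordinate is wrapped periodically into [0, nfft). The helper names `gridCoordSketch` and `gridCoordSketch3` are hypothetical, and the wrapping convention is an assumption, not NAMD's actual implementation.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper (not NAMD's calcGridCoord): maps a Cartesian
// coordinate x to a fractional grid coordinate in [0, nfft).
// Assumes recip = 1/L for a box of length L along this axis.
double gridCoordSketch(double x, double recip, int nfft) {
  assert(nfft > 0);
  double w = x * recip;   // fractional coordinate, any real value
  w -= std::floor(w);     // wrap into [0, 1)
  double fr = nfft * w;   // scale to grid units
  if (fr >= nfft) fr -= nfft;  // guard: rounding can yield exactly nfft
  return fr;
}

// Three-axis variant mirroring the recip11/recip22/recip33 overloads,
// assuming a diagonal reciprocal lattice.
void gridCoordSketch3(double x, double y, double z,
                      double recip11, double recip22, double recip33,
                      int nfftx, int nffty, int nfftz,
                      double &frx, double &fry, double &frz) {
  frx = gridCoordSketch(x, recip11, nfftx);
  fry = gridCoordSketch(y, recip22, nffty);
  frz = gridCoordSketch(z, recip33, nfftz);
}
```

Here is a worked example under these assumptions. For a 40 Å box with 48 grid points, x = 10 maps to 10/40 × 48 = 12. The coordinate x = -10 wraps to 0.75 × 48 = 36.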

| Protected Attributes inherited from PmeRealSpaceCompute | |
|---|---|
| int | numAtoms |
| PmeGrid | pmeGrid |
| int | y0 |
| int | z0 |
| int | xsize |
| int | ysize |
| int | zsize |
| int | dataSize |
| float * | data |
| const int | jblock |
| const int | kblock |
| unsigned int | multipleGridIndex |
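The attributes `jblock`, `kblock`, `y0`, `z0`, `xsize`, `ysize`, `zsize`, and `dataSize` describe the pencil of the grid that this object owns. The referenced `PmeGrid::yBlocks` and `PmeGrid::zBlocks` suggest a split of the y and z dimensions into blocks. The sketch below shows how such extents could relate to one another. It rests on three assumptions, not taken from NAMD:

- The pencil spans the full x dimension (`xsize = K1`).
- The y and z ranges come from a balanced integer split.
- `dataSize` is simply the product of the three extents.

The struct and function names are hypothetical.

```cpp
#include <cassert>

// Hypothetical pencil extents; field names mirror the protected
// attributes listed above, but the split rule is an assumption.
struct PencilExtentSketch {
  int y0, z0;               // first grid index owned along y and z
  int xsize, ysize, zsize;  // number of grid points along each axis
  int dataSize;             // total number of grid values in the pencil
};

// Balanced integer split (assumed): block b of n blocks over K points
// covers [b*K/n, (b+1)*K/n).
static int blockStart(int b, int K, int n) { return (b * K) / n; }

PencilExtentSketch pencilExtentSketch(int K1, int K2, int K3,
                                      int yBlocks, int zBlocks,
                                      int jblock, int kblock) {
  assert(jblock >= 0 && jblock < yBlocks);
  assert(kblock >= 0 && kblock < zBlocks);
  PencilExtentSketch p;
  p.xsize = K1;  // assumed: each pencil spans the whole x dimension
  p.y0 = blockStart(jblock, K2, yBlocks);
  p.ysize = blockStart(jblock + 1, K2, yBlocks) - p.y0;
  p.z0 = blockStart(kblock, K3, zBlocks);
  p.zsize = blockStart(kblock + 1, K3, zBlocks) - p.z0;
  p.dataSize = p.xsize * p.ysize * p.zsize;
  return p;
}
```

With K2 = 50 split over 4 y-blocks, the pencils own 12, 13, 12, and 13 rows, so the blocks still cover the whole dimension when K2 is not divisible by `yBlocks`.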

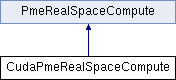

Detailed Description

Definition at line 112 of file CudaPmeSolverUtil.h.

Constructor & Destructor Documentation

◆ CudaPmeRealSpaceCompute()

CudaPmeRealSpaceCompute::CudaPmeRealSpaceCompute(PmeGrid pmeGrid, const int jblock, const int kblock, int deviceID, cudaStream_t stream)

Definition at line 545 of file CudaPmeSolverUtil.C.

References cudaCheck, PmeRealSpaceCompute::data, PmeRealSpaceCompute::dataSize, NAMD_bug(), PmeRealSpaceCompute::xsize, PmeRealSpaceCompute::ysize, and PmeRealSpaceCompute::zsize.

◆ ~CudaPmeRealSpaceCompute()

CudaPmeRealSpaceCompute::~CudaPmeRealSpaceCompute()

Definition at line 570 of file CudaPmeSolverUtil.C.

References cudaCheck, and PmeRealSpaceCompute::data.

Member Function Documentation

◆ copyAtoms()

void CudaPmeRealSpaceCompute::copyAtoms(const int numAtoms, const CudaAtom *atoms) [virtual]

Implements PmeRealSpaceCompute.

Definition at line 601 of file CudaPmeSolverUtil.C.

References cudaCheck, and PmeRealSpaceCompute::numAtoms.

◆ gatherForce()

void CudaPmeRealSpaceCompute::gatherForce(Lattice &lattice, CudaForce *force) [virtual]

Implements PmeRealSpaceCompute.

Definition at line 766 of file CudaPmeSolverUtil.C.

References cudaCheck, PmeRealSpaceCompute::data, gather_force(), PmeGrid::K1, PmeGrid::K2, PmeGrid::K3, NAMD_EVENT_START, NAMD_EVENT_STOP, PmeRealSpaceCompute::numAtoms, PmeGrid::order, PmeRealSpaceCompute::pmeGrid, PmeRealSpaceCompute::xsize, PmeRealSpaceCompute::y0, PmeGrid::yBlocks, PmeRealSpaceCompute::ysize, PmeRealSpaceCompute::z0, PmeGrid::zBlocks, and PmeRealSpaceCompute::zsize.
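In smooth PME, the force on a charge is the negative derivative of the energy with respect to its position. This derivative is obtained by weighting the potential grid with derivatives of the cardinal B-spline of order `PmeGrid::order`.

The 1D sketch below illustrates that step on the CPU. It uses the standard recursions M_n(u) = (u·M_{n-1}(u) + (n-u)·M_{n-1}(u-1))/(n-1) and dM_n/du = M_{n-1}(u) - M_{n-1}(u-1). It assumes `phi` already holds the potential after the reciprocal-space convolution, and that `dudx` converts grid units to Cartesian units. The function names are hypothetical, and NAMD's 3D GPU kernel `gather_force()` is not reproduced here.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Cardinal B-spline M_n(u), nonzero only on (0, n).
double bsplineM(int n, double u) {
  if (u <= 0.0 || u >= n) return 0.0;
  if (n == 2) return 1.0 - std::fabs(u - 1.0);
  return (u * bsplineM(n - 1, u) + (n - u) * bsplineM(n - 1, u - 1.0)) / (n - 1);
}

// dM_n/du = M_{n-1}(u) - M_{n-1}(u - 1), valid for n >= 3.
double bsplineDM(int n, double u) {
  assert(n >= 3);
  return bsplineM(n - 1, u) - bsplineM(n - 1, u - 1.0);
}

// Hypothetical 1D gather: force on charge q at grid coordinate u, given a
// periodic potential grid phi of length K. dudx = du/dx (e.g. K * recip).
double gatherForce1DSketch(const std::vector<double> &phi, int order,
                           double q, double u, double dudx) {
  const int K = static_cast<int>(phi.size());
  assert(K > 0);
  const int base = static_cast<int>(std::floor(u));
  double sum = 0.0;  // sum_k phi(k) * dM_n(u - k)/du
  // Only grid points with 0 < u - k < order contribute.
  for (int k = base - order + 1; k <= base; ++k) {
    const int kw = ((k % K) + K) % K;  // periodic wrap
    sum += phi[kw] * bsplineDM(order, u - k);
  }
  return -q * sum * dudx;  // F = -dE/dx
}
```

Two checks follow from the spline properties. A constant potential gives zero force, because the spline derivatives sum to zero. A potential that rises by 1 per grid point gives F = -q·dudx.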

◆ gatherForceSetCallback()

void CudaPmeRealSpaceCompute::gatherForceSetCallback(ComputePmeCUDADevice *devicePtr_in)

Definition at line 718 of file CudaPmeSolverUtil.C.

References CcdCallBacksReset(), and cudaCheck.

◆ spreadCharge()

void CudaPmeRealSpaceCompute::spreadCharge(Lattice &lattice) [virtual]

Implements PmeRealSpaceCompute.

Definition at line 615 of file CudaPmeSolverUtil.C.

References cudaCheck, PmeRealSpaceCompute::data, PmeGrid::K1, PmeGrid::K2, PmeGrid::K3, NAMD_EVENT_START, NAMD_EVENT_STOP, PmeRealSpaceCompute::numAtoms, PmeGrid::order, PmeRealSpaceCompute::pmeGrid, spread_charge(), PmeRealSpaceCompute::xsize, PmeRealSpaceCompute::y0, PmeGrid::yBlocks, PmeRealSpaceCompute::ysize, PmeRealSpaceCompute::z0, PmeGrid::zBlocks, and PmeRealSpaceCompute::zsize.
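Charge spreading is the reverse of the gather step. Each charge adds q·M_n(u - k) to every grid point k within `PmeGrid::order` points of its grid coordinate u.

The 1D sketch below builds the `order` spline weights for one charge iteratively. It starts from M_2(w) = w and M_2(w+1) = 1 - w and raises the order one step at a time, which avoids evaluating the recursion separately for every point. It then adds the weighted charge into a periodic grid. The function names are hypothetical. NAMD's 3D GPU kernel `spread_charge()` is not reproduced here and may organize this work differently.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Weights t[j] = M_n(w + j), j = 0..order-1, for fractional offset w in [0, 1).
std::vector<double> bsplineWeights(int order, double w) {
  assert(order >= 2);
  std::vector<double> t(order, 0.0);
  t[0] = w;        // M_2(w)
  t[1] = 1.0 - w;  // M_2(w + 1)
  for (int m = 3; m <= order; ++m) {
    // Descend in j so t[j - 1] still holds the order-(m-1) value.
    for (int j = m - 1; j >= 0; --j) {
      const double prev = (j > 0) ? t[j - 1] : 0.0;
      t[j] = ((w + j) * t[j] + (m - w - j) * prev) / (m - 1);
    }
  }
  return t;
}

// Hypothetical 1D spreading: adds q[i] * M_n(u[i] - k) to grid point k
// (periodic in the grid length K) for every charge i.
void spreadCharge1DSketch(std::vector<double> &grid, int order,
                          const std::vector<double> &q,
                          const std::vector<double> &u) {
  assert(q.size() == u.size());
  const int K = static_cast<int>(grid.size());
  assert(K > 0);
  for (std::size_t i = 0; i < q.size(); ++i) {
    const double base = std::floor(u[i]);
    const std::vector<double> t = bsplineWeights(order, u[i] - base);
    for (int j = 0; j < order; ++j) {
      const int k = static_cast<int>(base) - j;  // t[j] = M_n(u - k)
      grid[((k % K) + K) % K] += q[i] * t[j];
    }
  }
}
```

For order 4 and a charge sitting exactly on grid point 5, the weights on points 4, 3, and 2 are 1/6, 2/3, and 1/6. Because the weights sum to 1, the total charge on the grid is conserved.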

◆ waitGatherForceDone()

void CudaPmeRealSpaceCompute::waitGatherForceDone()

The documentation for this class was generated from the following files:

CudaPmeSolverUtil.h
CudaPmeSolverUtil.C

Generated by Doxygen 1.8.14
