#include <ComputeGlobal.h>

Public Member Functions

| Return type | Member |
| --- | --- |
| | ComputeGlobal (ComputeID, ComputeMgr *) |
| virtual | ~ComputeGlobal () |
| void | doWork () |
| void | recvResults (ComputeGlobalResultsMsg *) |
| void | saveTotalForces (HomePatch *) |
| int | getForceSendActive () const |

Public Member Functions inherited from ComputeHomePatches

| Return type | Member |
| --- | --- |
| | ComputeHomePatches (ComputeID c) |
| virtual | ~ComputeHomePatches () |
| virtual void | initialize () |
| virtual void | atomUpdate () |
| Flags * | getFlags (void) |

Public Member Functions inherited from Compute

| Return type | Member |
| --- | --- |
| | Compute (ComputeID) |
| int | type () |
| virtual | ~Compute () |
| void | setNumPatches (int n) |
| int | getNumPatches () |
| virtual void | patchReady (PatchID, int doneMigration, int seq) |
| virtual int | noWork () |
| virtual void | finishPatch (int) |
| int | sequence (void) |
| int | priority (void) |
| int | getGBISPhase (void) |
| virtual void | gbisP2PatchReady (PatchID, int seq) |
| virtual void | gbisP3PatchReady (PatchID, int seq) |

Additional Inherited Members

Public Attributes inherited from Compute

| Type | Name |
| --- | --- |
| const ComputeID | cid |
| LDObjHandle | ldObjHandle |
| LocalWorkMsg *const | localWorkMsg |

Protected Member Functions inherited from Compute

| Return type | Member |
| --- | --- |
| void | enqueueWork () |

Protected Attributes inherited from ComputeHomePatches

| Type | Name |
| --- | --- |
| int | useAvgPositions |
| int | hasPatchZero |
| ComputeHomePatchList | patchList |
| PatchMap * | patchMap |

Protected Attributes inherited from Compute

| Type | Name |
| --- | --- |
| int | computeType |
| int | basePriority |
| int | gbisPhase |
| int | gbisPhasePriority [3] |

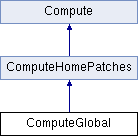

Detailed Description

Definition at line 35 of file ComputeGlobal.h.

Constructor & Destructor Documentation

◆ ComputeGlobal()

ComputeGlobal::ComputeGlobal ( ComputeID c, ComputeMgr * m )

Definition at line 38 of file ComputeGlobal.C.

References SimParameters::colvarsOn, SimParameters::CUDASOAintegrateMode, DebugM, SimParameters::FMAOn, SimParameters::fullDirectOn, SimParameters::GBISOn, SimParameters::GBISserOn, SimParameters::IMDon, PatchMap::numPatches(), PatchMap::Object(), Node::Object(), ReductionMgr::Object(), SimParameters::PMEOn, REDUCTIONS_BASIC, REDUCTIONS_GPURESIDENT, ResizeArray< Elem >::resize(), Node::simParameters, SimParameters::tclForcesOn, and ReductionMgr::willSubmit().

◆ ~ComputeGlobal()

virtual ComputeGlobal::~ComputeGlobal ()

Definition at line 115 of file ComputeGlobal.C.

Member Function Documentation

◆ doWork()

virtual void ComputeGlobal::doWork ()

Reimplemented from Compute.

Definition at line 519 of file ComputeGlobal.C.

References ResizeArray< Elem >::add(), ResizeArrayIter< T >::begin(), ComputeGlobalDataMsg::count, SimParameters::CUDASOAintegrate, DebugM, ComputeMgr::enableComputeGlobalResults(), ResizeArrayIter< T >::end(), endi(), SimParameters::globalMasterFrequency, ComputeHomePatches::hasPatchZero, ComputeGlobalDataMsg::lat, Node::Object(), ComputeGlobalDataMsg::patchcount, ComputeHomePatches::patchList, ComputeMgr::recvComputeGlobalResults(), ComputeMgr::sendComputeGlobalData(), Node::simParameters, and ComputeGlobalDataMsg::step.
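Because doWork() is reimplemented from Compute, a caller that holds a base-class pointer reaches the ComputeGlobal version through virtual dispatch. The sketch below illustrates only that relationship. The `*Sketch` classes are simplified stand-ins invented for this example, not the NAMD classes, and the string return value is an assumption made so the dispatched override can be observed (the documented doWork() returns void).

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical stand-ins for the documented hierarchy
// Compute -> ComputeHomePatches -> ComputeGlobal; not the real classes.
struct ComputeSketch {
    virtual ~ComputeSketch() = default;
    // The documented Compute::doWork() returns void; a string is returned
    // here only so the chosen override is visible to the caller.
    virtual std::string doWork() { return "Compute"; }
};

// Intermediate class that does not override doWork() in this sketch.
struct ComputeHomePatchesSketch : ComputeSketch {};

// Most-derived class reimplements doWork().
struct ComputeGlobalSketch : ComputeHomePatchesSketch {
    std::string doWork() override { return "ComputeGlobal"; }
};

// Calls doWork() through a base-class pointer and reports which
// implementation actually ran.
inline std::string runThroughBase() {
    std::unique_ptr<ComputeSketch> c = std::make_unique<ComputeGlobalSketch>();
    return c->doWork();
}
```

Calling `runThroughBase()` selects the most-derived override even though the pointer type is the base class.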

◆ getForceSendActive()

inline int ComputeGlobal::getForceSendActive () const

Definition at line 46 of file ComputeGlobal.h.

◆ recvResults()

void ComputeGlobal::recvResults ( ComputeGlobalResultsMsg * msg )

Definition at line 268 of file ComputeGlobal.C.

References ADD_TENSOR_OBJECT, ADD_VECTOR_OBJECT, ComputeGlobalResultsMsg::aid, ResizeArray< Elem >::begin(), ResizeArrayIter< T >::begin(), SimParameters::CUDASOAintegrate, DebugM, ResizeArray< Elem >::end(), ResizeArrayIter< T >::end(), ComputeGlobalResultsMsg::f, ComputeGlobalResultsMsg::gforce, SimParameters::globalMasterFrequency, SimParameters::globalMasterScaleByFrequency, Transform::i, LocalID::index, Transform::j, Transform::k, AtomMap::localID(), FullAtom::mass, NAMD_bug(), ComputeGlobalResultsMsg::newaid, ComputeGlobalResultsMsg::newgdef, ComputeGlobalResultsMsg::newgridobjid, Results::normal, notUsed, AtomMap::Object(), Node::Object(), outer(), ComputeHomePatches::patchList, LocalID::pid, CompAtom::position, ComputeGlobalResultsMsg::reconfig, ComputeGlobalResultsMsg::resendCoordinates, ResizeArray< Elem >::resize(), Lattice::reverse_transform(), Node::simParameters, ResizeArray< Elem >::size(), SubmitReduction::submit(), ComputeGlobalResultsMsg::totalforces, FullAtom::transform, Vector::x, Vector::y, and Vector::z.

Referenced by ComputeMgr::recvComputeGlobalResults().
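The "Referenced by" entry above means that ComputeMgr::recvComputeGlobalResults() calls recvResults(). A minimal sketch of such a forwarding call follows. Everything in it is an assumption made for illustration: the `*Sketch` types are invented stand-ins, the message carries only an `aid` list (the real ComputeGlobalResultsMsg has more fields), and the `computeGlobalObject` pointer and `received` counter are hypothetical names, not NAMD members.

```cpp
#include <cassert>
#include <vector>

// Hypothetical, reduced message; only an atom-ID list is kept.
struct ResultsMsgSketch {
    std::vector<int> aid;
};

// Stand-in for ComputeGlobal; counts the atom IDs it was handed.
struct ComputeGlobalSketch {
    int received = 0;  // illustrative counter, not a NAMD member
    void recvResults(ResultsMsgSketch *msg) {
        received += static_cast<int>(msg->aid.size());
    }
};

// Stand-in for ComputeMgr; forwards the message to the compute object.
struct ComputeMgrSketch {
    ComputeGlobalSketch *computeGlobalObject = nullptr;  // assumed name
    void recvComputeGlobalResults(ResultsMsgSketch *msg) {
        if (computeGlobalObject) {
            computeGlobalObject->recvResults(msg);
        }
    }
};

// Wires the two stand-ins together and forwards one message.
inline int forwardOnce() {
    ComputeGlobalSketch cg;
    ComputeMgrSketch mgr;
    mgr.computeGlobalObject = &cg;
    ResultsMsgSketch msg{{3, 7, 11}};
    mgr.recvComputeGlobalResults(&msg);
    return cg.received;
}
```

The sketch shows only the direction of the call; how the real manager locates its ComputeGlobal instance is not stated on this page.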

◆ saveTotalForces()

void ComputeGlobal::saveTotalForces ( HomePatch * homePatch )

Definition at line 973 of file ComputeGlobal.C.

References SimParameters::accelMDDebugOn, SimParameters::accelMDdihe, SimParameters::accelMDOn, ResizeArray< Elem >::add(), Results::amdf, ResizeArray< Elem >::begin(), SimParameters::CUDASOAintegrate, ResizeArray< Elem >::end(), Patch::f, PatchDataSOA::f_normal_x, PatchDataSOA::f_normal_y, PatchDataSOA::f_normal_z, PatchDataSOA::f_saved_nbond_x, PatchDataSOA::f_saved_nbond_y, PatchDataSOA::f_saved_nbond_z, PatchDataSOA::f_saved_slow_x, PatchDataSOA::f_saved_slow_y, PatchDataSOA::f_saved_slow_z, intpair::first, SimParameters::fixedAtomsOn, NAMD_bug(), Results::nbond, Results::normal, Patch::numAtoms, Node::Object(), Patch::patchID, ComputeHomePatches::patchList, intpair::second, Node::simParameters, Results::slow, Vector::x, Vector::y, and Vector::z.

Referenced by Sequencer::integrate(), Sequencer::integrate_SOA(), and Sequencer::minimize().

The documentation for this class was generated from the following files:

- ComputeGlobal.h
- ComputeGlobal.C

1.8.14