#include <ParallelIOMgr.h>

Public Attributes

| CthThread | sendAtomsThread |
| int | numAcksOutstanding |

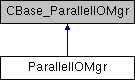

Detailed Description

Definition at line 155 of file ParallelIOMgr.h.

Constructor & Destructor Documentation

◆ ParallelIOMgr()

| ParallelIOMgr::ParallelIOMgr | ( | ) |

Definition at line 162 of file ParallelIOMgr.C.

◆ ~ParallelIOMgr()

| ParallelIOMgr::~ParallelIOMgr | ( | ) |

Definition at line 203 of file ParallelIOMgr.C.

Member Function Documentation

◆ ackAtomsToHomePatchProcs()

| void ParallelIOMgr::ackAtomsToHomePatchProcs | ( | ) |

Definition at line 1612 of file ParallelIOMgr.C.

◆ bcastHydroBasedCounter()

| void ParallelIOMgr::bcastHydroBasedCounter | ( | HydroBasedMsg * | msg | ) |

Definition at line 1320 of file ParallelIOMgr.C.

References endi(), iINFO(), iout, HydroBasedMsg::numFixedGroups, and HydroBasedMsg::numFixedRigidBonds.

◆ bcastMolInfo()

| void ParallelIOMgr::bcastMolInfo | ( | MolInfoMsg * | msg | ) |

Definition at line 1260 of file ParallelIOMgr.C.

References endi(), iINFO(), iout, MolInfoMsg::numAngles, MolInfoMsg::numBonds, MolInfoMsg::numCalcAngles, MolInfoMsg::numCalcBonds, MolInfoMsg::numCalcCrossterms, MolInfoMsg::numCalcDihedrals, MolInfoMsg::numCalcExclusions, MolInfoMsg::numCalcFullExclusions, MolInfoMsg::numCalcImpropers, MolInfoMsg::numCrossterms, MolInfoMsg::numDihedrals, MolInfoMsg::numExclusions, MolInfoMsg::numImpropers, MolInfoMsg::numRigidBonds, PDBVELFACTOR, MolInfoMsg::totalMass, and MolInfoMsg::totalMV.

◆ calcAtomsInEachPatch()

| void ParallelIOMgr::calcAtomsInEachPatch | ( | ) |

Definition at line 1404 of file ParallelIOMgr.C.

References PatchMap::assignToPatch(), AtomsCntPerPatchMsg::atomsCntList, AtomsCntPerPatchMsg::fixedAtomsCntList, PatchMap::getTmpPatchAtomsList(), PatchMap::initTmpPatchAtomsList(), InputAtom::isMP, InputAtom::isValid, AtomsCntPerPatchMsg::length, NAMD_die(), PatchMap::numPatches(), PatchMap::Object(), AtomsCntPerPatchMsg::pidList, and CompAtom::position.
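The references above suggest that each valid input atom is assigned to a patch by position, and that per-patch totals are packed into the parallel lists `pidList`, `atomsCntList`, and `fixedAtomsCntList`. The following is a minimal sketch of that counting pattern only, not NAMD's implementation. The types `Atom` and `PatchCounts`, the functions `patchOf` and `countAtomsPerPatch`, the uniform 1D patch grid, and the rule that invalid atoms are skipped are all assumptions made for illustration.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for an input atom; not NAMD's InputAtom.
struct Atom {
    double x;      // position along a single axis, for simplicity
    bool isValid;  // assumption: atoms flagged invalid are skipped
    bool isFixed;  // counted separately, like fixedAtomsCntList
};

// Hypothetical result: parallel lists that hold only non-empty patches.
struct PatchCounts {
    std::vector<int> pidList;
    std::vector<int> atomsCntList;
    std::vector<int> fixedAtomsCntList;
};

// Map a position to a patch index on a uniform 1D grid over [0, boxLen).
// Positions outside the box are clamped to the first or last patch.
int patchOf(double x, double boxLen, int numPatches) {
    assert(boxLen > 0.0 && numPatches > 0);
    int pid = static_cast<int>(x / boxLen * numPatches);
    if (pid < 0) pid = 0;
    if (pid >= numPatches) pid = numPatches - 1;
    return pid;
}

// Count total and fixed atoms per patch, then pack the non-empty patches.
PatchCounts countAtomsPerPatch(const std::vector<Atom>& atoms,
                               double boxLen, int numPatches) {
    std::vector<int> total(numPatches, 0), fixed(numPatches, 0);
    for (const Atom& a : atoms) {
        if (!a.isValid) continue;
        const int pid = patchOf(a.x, boxLen, numPatches);
        ++total[pid];
        if (a.isFixed) ++fixed[pid];
    }
    PatchCounts out;
    for (int pid = 0; pid < numPatches; ++pid) {
        if (total[pid] == 0) continue;  // report only patches that hold atoms
        out.pidList.push_back(pid);
        out.atomsCntList.push_back(total[pid]);
        out.fixedAtomsCntList.push_back(fixed[pid]);
    }
    return out;
}
```

Packing only the non-empty patches keeps the three lists the same length, so a single `length` field is enough to describe the message.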

◆ createHomePatches()

| void ParallelIOMgr::createHomePatches | ( | ) |

Definition at line 1685 of file ParallelIOMgr.C.

References PatchMgr::createHomePatch(), PatchMap::node(), PatchMap::numPatches(), PatchMap::numPatchesOnNode(), PatchMap::Object(), Node::Object(), and Node::workDistrib.

◆ disposeForces()

| void ParallelIOMgr::disposeForces | ( | int seq, double prevT | ) |

Definition at line 1929 of file ParallelIOMgr.C.

◆ disposePositions()

| void ParallelIOMgr::disposePositions | ( | int seq, double prevT | ) |

◆ disposeVelocities()

| void ParallelIOMgr::disposeVelocities | ( | int seq, double prevT | ) |

Definition at line 1903 of file ParallelIOMgr.C.

◆ freeMolSpace()

| void ParallelIOMgr::freeMolSpace | ( | ) |

Definition at line 1731 of file ParallelIOMgr.C.

◆ getNumOutputProcs()

| ParallelIOMgr::getNumOutputProcs | inline |

Definition at line 400 of file ParallelIOMgr.h.

◆ initialize()

| void ParallelIOMgr::initialize | ( | Node * | node | ) |

Definition at line 222 of file ParallelIOMgr.C.

References endi(), CollectionMgr::getMasterChareID(), iINFO(), iout, Node::molecule, NAMD_bug(), CollectionMgr::Object(), WorkDistrib::peCompactOrderingIndex, WorkDistrib::peDiffuseOrdering, and Node::simParameters.

◆ initializeDcdSelectionParams()

| void ParallelIOMgr::initializeDcdSelectionParams | ( | ) |

◆ integrateClusterSize()

| void ParallelIOMgr::integrateClusterSize | ( | ) |

Definition at line 790 of file ParallelIOMgr.C.

References ClusterSizeMsg::atomsCnt, ClusterSizeMsg::clusterId, and ClusterSizeMsg::srcRank.
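The fields referenced above (`atomsCnt`, `clusterId`, `srcRank`) suggest that partial cluster sizes arrive from several ranks and are summed per cluster. The following is a minimal sketch of such an accumulation, assuming each message carries one partial count. The struct below only borrows the field names; it is not NAMD's `ClusterSizeMsg`, and `integrateSizes` is a hypothetical helper.

```cpp
#include <cassert>
#include <map>
#include <vector>

// Hypothetical message; only the field names follow the references above.
struct ClusterSizeMsg {
    int srcRank;    // rank that reported this partial count
    int clusterId;  // identifier of the cluster
    int atomsCnt;   // number of the cluster's atoms held by srcRank
};

// Sum the partial atom counts reported for each cluster.
std::map<int, int> integrateSizes(const std::vector<ClusterSizeMsg>& msgs) {
    std::map<int, int> sizeOf;
    for (const ClusterSizeMsg& m : msgs) {
        assert(m.atomsCnt >= 0);
        sizeOf[m.clusterId] += m.atomsCnt;  // missing keys start at 0
    }
    return sizeOf;
}
```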

◆ integrateMigratedAtoms()

| void ParallelIOMgr::integrateMigratedAtoms | ( | ) |

Definition at line 949 of file ParallelIOMgr.C.

References CompAtomExt::atomFixed, CompAtom::hydrogenGroupSize, InputAtom::isGP, InputAtom::isValid, HydroBasedMsg::numFixedGroups, HydroBasedMsg::numFixedRigidBonds, and FullAtom::rigidBondLength.

◆ isOutputProcessor()

| bool ParallelIOMgr::isOutputProcessor | ( | int | pe | ) |

Definition at line 358 of file ParallelIOMgr.C.

◆ migrateAtomsMGrp()

| void ParallelIOMgr::migrateAtomsMGrp | ( | ) |

Definition at line 889 of file ParallelIOMgr.C.

References ResizeArray< Elem >::add(), MoveInputAtomsMsg::atomList, ResizeArray< Elem >::begin(), ResizeArray< Elem >::clear(), MoveInputAtomsMsg::length, and ResizeArray< Elem >::size().

◆ readInfoForParOutDcdSelection()

| void ParallelIOMgr::readInfoForParOutDcdSelection | ( | ) |

Definition at line 738 of file ParallelIOMgr.C.

References Molecule::dcdSelectionParams, DebugM, IndexFile::getAllElements(), dcd_params::inputFilename, Node::molecule, Node::Object(), and dcd_params::size.

◆ readPerAtomInfo()

| void ParallelIOMgr::readPerAtomInfo | ( | ) |

Definition at line 368 of file ParallelIOMgr.C.

◆ receiveForces()

| void ParallelIOMgr::receiveForces | ( | CollectVectorVarMsg * | msg | ) |

Definition at line 1852 of file ParallelIOMgr.C.

References NAMD_bug(), and CollectVectorVarMsg::seq.

◆ receivePositions()

| void ParallelIOMgr::receivePositions | ( | CollectVectorVarMsg * | msg | ) |

Definition at line 1806 of file ParallelIOMgr.C.

References NAMD_bug(), and CollectVectorVarMsg::seq.

◆ receiveVelocities()

| void ParallelIOMgr::receiveVelocities | ( | CollectVectorVarMsg * | msg | ) |

Definition at line 1829 of file ParallelIOMgr.C.

References NAMD_bug(), and CollectVectorVarMsg::seq.

◆ recvAtomsCntPerPatch()

| void ParallelIOMgr::recvAtomsCntPerPatch | ( | AtomsCntPerPatchMsg * | msg | ) |

Definition at line 1482 of file ParallelIOMgr.C.

References AtomsCntPerPatchMsg::atomsCntList, endi(), AtomsCntPerPatchMsg::fixedAtomsCntList, iINFO(), iout, AtomsCntPerPatchMsg::length, NAMD_die(), PatchMap::numPatches(), PatchMap::Object(), Node::Object(), and AtomsCntPerPatchMsg::pidList.

◆ recvAtomsMGrp()

| void ParallelIOMgr::recvAtomsMGrp | ( | MoveInputAtomsMsg * | msg | ) |

Definition at line 941 of file ParallelIOMgr.C.

References MoveInputAtomsMsg::atomList, and MoveInputAtomsMsg::length.

◆ recvAtomsToHomePatchProcs()

| void ParallelIOMgr::recvAtomsToHomePatchProcs | ( | MovePatchAtomsMsg * | msg | ) |

Definition at line 1621 of file ParallelIOMgr.C.

References MovePatchAtomsMsg::allAtoms, MovePatchAtomsMsg::from, MovePatchAtomsMsg::patchCnt, MovePatchAtomsMsg::pidList, and MovePatchAtomsMsg::sizeList.

◆ recvClusterCoor()

| void ParallelIOMgr::recvClusterCoor | ( | ClusterCoorMsg * | msg | ) |

Definition at line 2030 of file ParallelIOMgr.C.

◆ recvClusterSize()

| void ParallelIOMgr::recvClusterSize | ( | ClusterSizeMsg * | msg | ) |

Definition at line 782 of file ParallelIOMgr.C.

◆ recvDcdParams()

| void ParallelIOMgr::recvDcdParams | ( | std::vector< uint16 > tags, std::vector< std::string > inputFileNames, std::vector< std::string > outputFileNames, std::vector< int > freqs, std::vector< OUTPUTFILETYPE > types | ) |

Definition at line 712 of file ParallelIOMgr.C.

References Molecule::dcdSelectionAtoms, Molecule::dcdSelectionParams, dcd_params::frequency, dcd_params::inputFilename, Node::molecule, NAMD_FILENAME_BUFFER_SIZE, Node::Object(), dcd_params::outFilename, dcd_params::tag, and dcd_params::type.
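The signature takes five parallel vectors, and the references list per-record fields (`tag`, `inputFilename`, `outFilename`, `frequency`, `type`) together with a filename buffer size. The following is a minimal sketch of unpacking parallel vectors into fixed-size records under those assumptions. `DcdParams`, `kFilenameBufferSize`, `copyBounded`, and `unpackDcdParams` are hypothetical names, the buffer size of 64 is arbitrary, and `int` stands in for `OUTPUTFILETYPE`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical buffer size; NAMD_FILENAME_BUFFER_SIZE may differ.
constexpr std::size_t kFilenameBufferSize = 64;

// Hypothetical record that mirrors the field names listed above.
struct DcdParams {
    std::uint16_t tag;
    char inputFilename[kFilenameBufferSize];
    char outFilename[kFilenameBufferSize];
    int frequency;
    int type;  // stands in for OUTPUTFILETYPE
};

// Copy a string into a fixed buffer, truncating if needed and
// always leaving the result NUL-terminated.
void copyBounded(char (&dst)[kFilenameBufferSize], const std::string& src) {
    std::strncpy(dst, src.c_str(), kFilenameBufferSize - 1);
    dst[kFilenameBufferSize - 1] = '\0';
}

// Turn five parallel vectors into one record per index.
std::vector<DcdParams> unpackDcdParams(
    const std::vector<std::uint16_t>& tags,
    const std::vector<std::string>& inputFileNames,
    const std::vector<std::string>& outputFileNames,
    const std::vector<int>& freqs,
    const std::vector<int>& types) {
    const std::size_t n = tags.size();
    assert(inputFileNames.size() == n && outputFileNames.size() == n);
    assert(freqs.size() == n && types.size() == n);
    std::vector<DcdParams> out(n);  // value-initialized, so buffers start zeroed
    for (std::size_t i = 0; i < n; ++i) {
        out[i].tag = tags[i];
        copyBounded(out[i].inputFilename, inputFileNames[i]);
        copyBounded(out[i].outFilename, outputFileNames[i]);
        out[i].frequency = freqs[i];
        out[i].type = types[i];
    }
    return out;
}
```

Checking that all five vectors have the same length before the loop catches a malformed message early instead of reading past the end of a shorter vector.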

◆ recvFinalClusterCoor()

| void ParallelIOMgr::recvFinalClusterCoor | ( | ClusterCoorMsg * | msg | ) |

Definition at line 2102 of file ParallelIOMgr.C.

References ClusterCoorElem::clusterId, ClusterCoorMsg::clusterId, ClusterCoorElem::dsum, ClusterCoorMsg::dsum, ResizeArray< Elem >::size(), Lattice::wrap_delta(), and Lattice::wrap_nearest_delta().
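The references to `dsum` and `Lattice::wrap_delta()` suggest that a cluster is wrapped as a unit: coordinates are summed, the average gives a cluster center, and one shift derived from that center is applied to every atom. The following is a minimal 1D sketch of that idea for a periodic box of length `boxLen`. `wrapDelta` and `wrapCluster` are hypothetical helpers and do not reproduce the `Lattice` API.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Shift that moves `center` into [0, boxLen): a 1D analogue of a wrap delta.
double wrapDelta(double center, double boxLen) {
    assert(boxLen > 0.0);
    return -boxLen * std::floor(center / boxLen);
}

// Wrap a cluster as a unit: sum the coordinates, average them,
// and apply the same shift to every atom so the cluster stays whole.
void wrapCluster(std::vector<double>& coords, double boxLen) {
    if (coords.empty()) return;
    double dsum = 0.0;
    for (double x : coords) dsum += x;
    const double delta = wrapDelta(dsum / coords.size(), boxLen);
    for (double& x : coords) x += delta;
}
```

Because the shift comes from the cluster center rather than from each atom, an individual atom may still end up slightly outside the box; that is the price of keeping the cluster contiguous.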

◆ recvFinalClusterSize()

| void ParallelIOMgr::recvFinalClusterSize | ( | ClusterSizeMsg * | msg | ) |

Definition at line 842 of file ParallelIOMgr.C.

References ClusterElem::atomsCnt, ClusterSizeMsg::atomsCnt, ClusterElem::clusterId, and ClusterSizeMsg::clusterId.

◆ recvHydroBasedCounter()

| void ParallelIOMgr::recvHydroBasedCounter | ( | HydroBasedMsg * | msg | ) |

Definition at line 1307 of file ParallelIOMgr.C.

References HydroBasedMsg::numFixedGroups, and HydroBasedMsg::numFixedRigidBonds.

◆ recvMolInfo()

| void ParallelIOMgr::recvMolInfo | ( | MolInfoMsg * | msg | ) |

Definition at line 1204 of file ParallelIOMgr.C.

References MolInfoMsg::numAngles, MolInfoMsg::numBonds, MolInfoMsg::numCalcAngles, MolInfoMsg::numCalcBonds, MolInfoMsg::numCalcCrossterms, MolInfoMsg::numCalcDihedrals, MolInfoMsg::numCalcExclusions, MolInfoMsg::numCalcFullExclusions, MolInfoMsg::numCalcImpropers, MolInfoMsg::numCrossterms, MolInfoMsg::numDihedrals, MolInfoMsg::numExclusions, MolInfoMsg::numImpropers, MolInfoMsg::numRigidBonds, MolInfoMsg::totalCharge, MolInfoMsg::totalMass, and MolInfoMsg::totalMV.

◆ sendAtomsToHomePatchProcs()

| void ParallelIOMgr::sendAtomsToHomePatchProcs | ( | ) |

Definition at line 1532 of file ParallelIOMgr.C.

References ResizeArray< Elem >::add(), MovePatchAtomsMsg::allAtoms, ResizeArray< Elem >::begin(), call_sendAtomsToHomePatchProcs(), ResizeArray< Elem >::clear(), PatchMap::delTmpPatchAtomsList(), MovePatchAtomsMsg::from, PatchMap::getTmpPatchAtomsList(), PatchMap::node(), PatchMap::numPatches(), PatchMap::Object(), MovePatchAtomsMsg::patchCnt, MovePatchAtomsMsg::pidList, Random::reorder(), ResizeArray< Elem >::size(), and MovePatchAtomsMsg::sizeList.

◆ sendDcdParams()

| void ParallelIOMgr::sendDcdParams | ( | ) |

Definition at line 679 of file ParallelIOMgr.C.

References Molecule::dcdSelectionAtoms, Molecule::dcdSelectionParams, dcd_params::frequency, dcd_params::inputFilename, Node::molecule, Node::Object(), dcd_params::outFilename, dcd_params::tag, and dcd_params::type.

◆ updateMolInfo()

| void ParallelIOMgr::updateMolInfo | ( | ) |

Definition at line 1016 of file ParallelIOMgr.C.

References AtomSignature::angleCnt, AtomSignature::angleSigs, atomSigPool, AtomSignature::bondCnt, AtomSignature::bondSigs, AtomSignature::crosstermCnt, AtomSignature::crosstermSigs, AtomSignature::dihedralCnt, AtomSignature::dihedralSigs, ExclusionSignature::fullExclCnt, ExclusionSignature::fullOffset, AtomSignature::gromacsPairCnt, AtomSignature::gromacsPairSigs, AtomSignature::improperCnt, AtomSignature::improperSigs, ExclusionSignature::modExclCnt, ExclusionSignature::modOffset, MolInfoMsg::numAngles, MolInfoMsg::numBonds, MolInfoMsg::numCalcAngles, MolInfoMsg::numCalcBonds, MolInfoMsg::numCalcCrossterms, MolInfoMsg::numCalcDihedrals, MolInfoMsg::numCalcExclusions, MolInfoMsg::numCalcFullExclusions, MolInfoMsg::numCalcImpropers, MolInfoMsg::numCalcLJPairs, MolInfoMsg::numCrossterms, MolInfoMsg::numDihedrals, MolInfoMsg::numExclusions, MolInfoMsg::numImpropers, MolInfoMsg::numLJPairs, MolInfoMsg::numRigidBonds, TupleSignature::offset, MolInfoMsg::totalCharge, MolInfoMsg::totalMass, and MolInfoMsg::totalMV.

◆ wrapCoor()

| void ParallelIOMgr::wrapCoor | ( | int seq, Lattice lat | ) |

Definition at line 1956 of file ParallelIOMgr.C.

References ResizeArray< Elem >::add(), UniqueSetIter< T >::begin(), ClusterCoorElem::clusterId, ClusterCoorMsg::clusterId, ClusterCoorElem::dsum, ClusterCoorMsg::dsum, UniqueSetIter< T >::end(), ResizeArray< Elem >::size(), and ClusterCoorMsg::srcRank.

Member Data Documentation

◆ numAcksOutstanding

| int ParallelIOMgr::numAcksOutstanding |

Definition at line 386 of file ParallelIOMgr.h.

◆ sendAtomsThread

| CthThread ParallelIOMgr::sendAtomsThread |

Definition at line 384 of file ParallelIOMgr.h.
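This page does not describe how `numAcksOutstanding` and `sendAtomsThread` are used. Their names, together with `ackAtomsToHomePatchProcs()`, suggest an acknowledgement-based flow-control scheme: the sender counts unacknowledged messages and pauses when too many are in flight. The following is a minimal single-threaded sketch of that pattern, offered as an assumption rather than a description of NAMD's code. `AckWindow` and its window size are hypothetical, and a boolean flag stands in for suspending and awakening a `CthThread`.

```cpp
#include <cassert>

// Hypothetical flow-control helper; not NAMD's implementation.
class AckWindow {
public:
    explicit AckWindow(int maxOutstanding) : maxOutstanding_(maxOutstanding) {
        assert(maxOutstanding > 0);
    }

    // Try to send one message. Returns false when the sender must pause
    // because too many messages are still unacknowledged.
    bool trySend() {
        if (numAcksOutstanding_ >= maxOutstanding_) {
            paused_ = true;  // stands in for suspending the sending thread
            return false;
        }
        ++numAcksOutstanding_;
        return true;
    }

    // Record one acknowledgement. Returns true if a paused sender
    // should now be resumed.
    bool onAck() {
        assert(numAcksOutstanding_ > 0);
        --numAcksOutstanding_;
        const bool resume = paused_;
        paused_ = false;
        return resume;
    }

    int outstanding() const { return numAcksOutstanding_; }

private:
    int maxOutstanding_;
    int numAcksOutstanding_ = 0;
    bool paused_ = false;
};
```

Bounding the number of in-flight messages limits how much memory receivers must buffer at once, which is the usual motivation for this kind of counter.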

The documentation for this class was generated from the following files: ParallelIOMgr.h and ParallelIOMgr.C.
