#include <ComputeNonbondedCUDA.h>

Classes | |

| struct | compute_record |

| struct | patch_record |

Public Member Functions | |

| ComputeNonbondedCUDA (ComputeID c, ComputeMgr *mgr, ComputeNonbondedCUDA *m=0, int idx=-1) | |

| ~ComputeNonbondedCUDA () | |

| void | atomUpdate () |

| void | doWork () |

| int | noWork () |

| void | skip () |

| void | recvYieldDevice (int pe) |

| int | finishWork () |

| void | finishReductions () |

| void | finishPatch (int) |

| void | messageFinishPatch (int) |

| void | requirePatch (int pid) |

| void | assignPatches () |

| void | registerPatches () |

Public Member Functions inherited from Compute | |

| Compute (ComputeID) | |

| int | type () |

| virtual | ~Compute () |

| void | setNumPatches (int n) |

| int | getNumPatches () |

| virtual void | initialize () |

| virtual void | patchReady (PatchID, int doneMigration, int seq) |

| int | sequence (void) |

| int | priority (void) |

| int | getGBISPhase (void) |

| virtual void | gbisP2PatchReady (PatchID, int seq) |

| virtual void | gbisP3PatchReady (PatchID, int seq) |

Static Public Member Functions | |

| static void | build_lj_table () |

| static void | build_force_table () |

| static void | build_exclusions () |

Additional Inherited Members | |

Protected Member Functions inherited from Compute | |

| void | enqueueWork () |

Protected Attributes inherited from Compute | |

| int | computeType |

| int | basePriority |

| int | gbisPhase |

| int | gbisPhasePriority [3] |

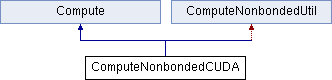

Detailed Description

Definition at line 25 of file ComputeNonbondedCUDA.h.

Constructor & Destructor Documentation

| ComputeNonbondedCUDA::ComputeNonbondedCUDA | ( | ComputeID c, ComputeMgr * mgr, ComputeNonbondedCUDA * m = 0, int idx = -1 | ) |

Definition at line 437 of file ComputeNonbondedCUDA.C.

References atomMap, atoms, atoms_size, atomsChanged, Compute::basePriority, build_exclusions(), computeMgr, computesChanged, cuda_errcheck(), cuda_init(), cudaCompute, dEdaSumH, dEdaSumH_size, deviceCUDA, deviceID, end_local_download, end_remote_download, forces, forces_size, SimParameters::GBISOn, DeviceCUDA::getDeviceID(), DeviceCUDA::getMergeGrids(), DeviceCUDA::getNoMergeGrids(), DeviceCUDA::getNoStreaming(), DeviceCUDA::getSharedGpu(), init_arrays(), localWorkMsg2, master, masterPe, max_grid_size, NAMD_bug(), NAMD_die(), PatchMap::numPatches(), PatchMap::Object(), AtomMap::Object(), Node::Object(), pairlistsValid, pairlistTolerance, patch_pair_num_ptr, patch_pairs_ptr, patchMap, patchPairsReordered, patchRecords, plcutoff2, SimParameters::PMEOffload, SimParameters::PMEOn, SimParameters::pressureProfileOn, PRIORITY_SIZE, PROXY_DATA_PRIORITY, psiSumH, psiSumH_size, reduction, registerPatches(), savePairlists, DeviceCUDA::setGpuIsMine(), DeviceCUDA::setMergeGrids(), Node::simParameters, slaveIndex, slavePes, slaves, slow_forces, slow_forces_size, start_calc, stream, stream2, usePairlists, SimParameters::usePMECUDA, and workStarted.
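The defaulted trailing parameters (`m = 0`, `idx = -1`), together with the `master`, `masterPe`, and `slaveIndex` members referenced above, suggest that one instance acts as a master and further instances attach to it. The following is a minimal sketch of that default-argument pattern only. `NonbondedSketch` is a hypothetical stand-in, not the NAMD class, and the rule that a null `m` means "this object is its own master" is an assumption that this page does not confirm.

```cpp
#include <cassert>

// Hypothetical stand-in for illustration only; not the NAMD class.
struct NonbondedSketch {
    NonbondedSketch* master;  // assumed: points at the owning master instance
    int slaveIndex;           // assumed: -1 for the master itself

    // Mirrors the shape of the documented constructor's trailing defaults.
    explicit NonbondedSketch(int /*computeId*/, NonbondedSketch* m = 0, int idx = -1)
        : master(m ? m : this), slaveIndex(idx) {
        assert(idx >= -1);  // assumed: no index below the -1 sentinel
    }

    bool isMaster() const { return master == this; }
};
```

Under these assumptions, `NonbondedSketch a(1);` would construct a master, and `NonbondedSketch b(2, &a, 0);` would construct the first instance attached to it.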

| ComputeNonbondedCUDA::~ComputeNonbondedCUDA | ( | ) |

Definition at line 577 of file ComputeNonbondedCUDA.C.

Member Function Documentation

| void ComputeNonbondedCUDA::assignPatches | ( | ) |

Definition at line 661 of file ComputeNonbondedCUDA.C.

References activePatches, ResizeArray< T >::add(), computeMgr, deviceCUDA, DeviceCUDA::getNumPesSharingDevice(), DeviceCUDA::getPesSharingDevice(), ComputeNonbondedCUDA::patch_record::hostPe, PatchMap::node(), numSlaves, ReductionMgr::Object(), PatchMap::ObjectOnPe(), patchMap, patchRecords, reduction, REDUCTIONS_BASIC, registerPatches(), ComputeMgr::sendCreateNonbondedCUDASlave(), ResizeArray< T >::size(), slavePes, slaves, sort, and ReductionMgr::willSubmit().

Referenced by ComputeMgr::createComputes().

| void ComputeNonbondedCUDA::atomUpdate | ( | ) |

Reimplemented from Compute.

Definition at line 1042 of file ComputeNonbondedCUDA.C.

References atomsChanged.

| void ComputeNonbondedCUDA::build_exclusions | ( | ) | static |

Definition at line 259 of file ComputeNonbondedCUDA.C.

References ResizeArray< Elem >::add(), ResizeArray< T >::add(), ResizeArray< T >::begin(), cuda_bind_exclusions(), ResizeArray< T >::end(), exclusionsByAtom, ExclusionSignature::fullExclCnt, ExclusionSignature::fullOffset, Molecule::get_full_exclusions_for_atom(), ObjectArena< Type >::getNewArray(), MAX_EXCLUSIONS, ComputeNonbondedUtil::mol, Node::molecule, NAMD_bug(), NAMD_die(), Molecule::numAtoms, Node::Object(), ResizeArray< Elem >::resize(), SET_EXCL, ResizeArray< T >::size(), and SortableResizeArray< Type >::sort().

Referenced by build_cuda_exclusions(), and ComputeNonbondedCUDA().

| void ComputeNonbondedCUDA::build_force_table | ( | ) | static |

Definition at line 114 of file ComputeNonbondedCUDA.C.

References cuda_bind_force_table(), ComputeNonbondedUtil::cutoff, ComputeNonbondedUtil::fast_table, FORCE_TABLE_SIZE, ComputeNonbondedUtil::r2_delta, ComputeNonbondedUtil::r2_delta_exp, ComputeNonbondedUtil::r2_table, ComputeNonbondedUtil::scor_table, ComputeNonbondedUtil::vdwa_table, and ComputeNonbondedUtil::vdwb_table.

Referenced by build_cuda_force_table().

| void ComputeNonbondedCUDA::build_lj_table | ( | ) | static |

Definition at line 87 of file ComputeNonbondedCUDA.C.

References LJTable::TableEntry::A, LJTable::TableEntry::B, cuda_bind_lj_table(), LJTable::get_table_dim(), ComputeNonbondedUtil::ljTable, NAMD_bug(), ComputeNonbondedUtil::scaling, and LJTable::table_val().

Referenced by build_cuda_force_table().

| void ComputeNonbondedCUDA::doWork | ( | ) |

Reimplemented from Compute.

Definition at line 1176 of file ComputeNonbondedCUDA.C.

References Lattice::a(), activePatches, atom_params, atom_params_size, atoms, atoms_size, atomsChanged, Lattice::b(), Compute::basePriority, ResizeArray< T >::begin(), block_order, block_order_size, ComputeNonbondedCUDA::patch_record::bornRad, bornRadH, bornRadH_size, Lattice::c(), PatchMap::center(), CompAtom::charge, COMPUTE_PROXY_PRIORITY, computeRecords, computesChanged, COULOMB, cuda_bind_patch_pairs(), cuda_check_local_progress(), cuda_errcheck(), cudaCheck, cudaCompute, ComputeNonbondedUtil::cutoff, ComputeNonbondedUtil::cutoff2, dEdaSumH, dEdaSumH_size, deviceCUDA, deviceID, ComputeNonbondedCUDA::patch_record::dHdrPrefix, dHdrPrefixH, dHdrPrefixH_size, ComputeNonbondedUtil::dielectric_1, doSlow, dummy_dev, dummy_size, energy_gbis, energy_gbis_size, exclusionsByAtom, finishWork(), ComputeNonbondedUtil::fixedAtomsOn, Patch::flags, force_ready_queue, force_ready_queue_len, force_ready_queue_size, forces, forces_size, SimParameters::GBISOn, GBISP, Compute::gbisPhase, Patch::getCudaAtomList(), DeviceCUDA::getGpuIsMine(), DeviceCUDA::getNoStreaming(), Patch::getNumAtoms(), hostedPatches, CompAtomExt::id, ComputeNonbondedCUDA::patch_record::intRad, intRad0H, intRad0H_size, intRadSH, intRadSH_size, SubmitReduction::item(), kernel_time, lata, latb, latc, Flags::lattice, lattice, localActivePatches, localComputeRecords, ComputeNonbondedCUDA::patch_record::localStart, master, Flags::maxAtomMovement, ComputeNonbondedUtil::mol, Node::molecule, NAMD_bug(), num_atoms, num_local_atoms, num_remote_atoms, num_virials, ComputeNonbondedCUDA::patch_record::numAtoms, ComputeNonbondedCUDA::patch_record::numFreeAtoms, Node::Object(), ComputeNonbondedCUDA::compute_record::offset, ComputeNonbondedCUDA::patch_record::p, pairlistsValid, Flags::pairlistTolerance, pairlistTolerance, Node::parameters, patch_pair_num_ptr, patch_pairs, patch_pairs_ptr, ComputeNonbondedCUDA::patch_record::patchID, patchMap, patchPairsReordered, patchRecords, ComputeNonbondedCUDA::compute_record::pid, 
plcutoff2, CompAtom::position, PROXY_DATA_PRIORITY, PROXY_RESULTS_PRIORITY, psiSumH, psiSumH_size, recvYieldDevice(), reduction, REDUCTION_PAIRLIST_WARNINGS, remoteActivePatches, remoteComputeRecords, ResizeArray< T >::resize(), Flags::savePairlists, savePairlists, ComputeNonbondedUtil::scaling, Compute::sequence(), Node::simParameters, simParams, ResizeArray< T >::size(), slow_forces, slow_forces_size, slow_virials, CompAtomExt::sortOrder, ResizeArray< T >::swap(), Lattice::unscale(), Flags::usePairlists, usePairlists, vdw_types, vdw_types_size, CompAtom::vdwType, virials, virials_size, WARPSIZE, workStarted, ComputeNonbondedCUDA::patch_record::x, Vector::x, CudaAtom::x, ComputeNonbondedCUDA::patch_record::xExt, Vector::y, and Vector::z.

| void ComputeNonbondedCUDA::finishPatch | ( | int | ) | virtual |

Reimplemented from Compute.

Definition at line 1868 of file ComputeNonbondedCUDA.C.

References activePatches, Box< Owner, Data >::close(), ComputeNonbondedCUDA::patch_record::forceBox, master, Box< Owner, Data >::open(), patchRecords, ComputeNonbondedCUDA::patch_record::positionBox, ComputeNonbondedCUDA::patch_record::r, and ComputeNonbondedCUDA::patch_record::x.

Referenced by finishWork().

| void ComputeNonbondedCUDA::finishReductions | ( | ) |

Definition at line 2032 of file ComputeNonbondedCUDA.C.

References ADD_TENSOR_OBJECT, Compute::basePriority, block_order, computeRecords, cuda_errcheck(), cuda_timer_count, cuda_timer_total, doEnergy, doSlow, end_local_download, end_remote_download, endi(), energy_gbis, SimParameters::GBISOn, iout, SubmitReduction::item(), localComputeRecords, num_virials, Node::Object(), SimParameters::outputCudaTiming, patchPairsReordered, patchRecords, PROXY_DATA_PRIORITY, reduction, REDUCTION_ELECT_ENERGY, REDUCTION_ELECT_ENERGY_SLOW, REDUCTION_EXCLUSION_CHECKSUM_CUDA, REDUCTION_LJ_ENERGY, remoteComputeRecords, Node::simParameters, simParams, slow_virials, start_calc, step, SubmitReduction::submit(), virials, Tensor::xx, Tensor::xy, Tensor::xz, Tensor::yx, Tensor::yy, Tensor::yz, Tensor::zx, Tensor::zy, and Tensor::zz.

Referenced by finishWork().

| int ComputeNonbondedCUDA::finishWork | ( | ) |

Definition at line 1906 of file ComputeNonbondedCUDA.C.

References atomsChanged, ComputeNonbondedCUDA::patch_record::bornRad, ComputeNonbondedCUDA::patch_record::bornRadBox, Box< Owner, Data >::close(), computeMgr, cuda_timer_total, ComputeNonbondedCUDA::patch_record::dEdaSum, ComputeNonbondedCUDA::patch_record::dEdaSumBox, dEdaSumH, deviceCUDA, ComputeNonbondedCUDA::patch_record::dHdrPrefix, ComputeNonbondedCUDA::patch_record::dHdrPrefixBox, doSlow, finishPatch(), finishReductions(), ComputeNonbondedCUDA::patch_record::forceBox, SimParameters::GBISOn, GBISP, Compute::gbisPhase, DeviceCUDA::getMergeGrids(), ComputeNonbondedCUDA::patch_record::intRad, ComputeNonbondedCUDA::patch_record::intRadBox, SubmitReduction::item(), kernel_time, localHostedPatches, ComputeNonbondedCUDA::patch_record::localStart, master, ComputeNonbondedUtil::mol, Node::molecule, ComputeNonbondedCUDA::patch_record::numAtoms, numSlaves, Node::Object(), Box< Owner, Data >::open(), ComputeNonbondedCUDA::patch_record::patchID, patchRecords, CompAtom::position, ComputeNonbondedCUDA::patch_record::positionBox, Compute::priority(), ComputeNonbondedCUDA::patch_record::psiSum, ComputeNonbondedCUDA::patch_record::psiSumBox, psiSumH, ComputeNonbondedCUDA::patch_record::r, reduction, remoteHostedPatches, ComputeMgr::sendNonbondedCUDASlaveEnqueue(), Compute::sequence(), Node::simParameters, simParams, ResizeArray< T >::size(), slavePes, slaves, CompAtomExt::sortOrder, workStarted, ComputeNonbondedCUDA::patch_record::x, Vector::x, ComputeNonbondedCUDA::patch_record::xExt, Vector::y, and Vector::z.

Referenced by doWork().
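The cross-references above indicate that `doWork()` reaches `finishWork()`, which in turn reaches `finishPatch()` and `finishReductions()`. The sketch below illustrates only that nesting, using a hypothetical `WorkSketch` type that logs each call. The call ordering, the per-patch loop, and the return-value convention are assumptions and are not taken from ComputeNonbondedCUDA.C.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical mock; member names echo the documented ones, bodies are invented.
struct WorkSketch {
    std::vector<int> activePatches;  // patch indices to finish
    std::vector<std::string> log;    // records the order of calls

    void finishPatch(int i) {
        assert(i >= 0);  // assumed: patch indices are non-negative
        log.push_back("finishPatch(" + std::to_string(i) + ")");
    }

    void finishReductions() { log.push_back("finishReductions"); }

    // Assumed convention: returns 1 when all work for the step is complete.
    int finishWork() {
        log.push_back("finishWork");
        for (int i : activePatches) finishPatch(i);
        finishReductions();
        return 1;
    }

    void doWork() {
        log.push_back("doWork");
        finishWork();
    }
};
```

In the real class the GPU work is asynchronous, so `finishWork()` may well be polled more than once per step; the mock deliberately ignores that.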

| void ComputeNonbondedCUDA::messageFinishPatch | ( | int | flindex | ) |

Definition at line 1861 of file ComputeNonbondedCUDA.C.

References activePatches, computeMgr, ComputeNonbondedCUDA::patch_record::hostPe, ComputeNonbondedCUDA::patch_record::msg, patchRecords, PROXY_DATA_PRIORITY, ComputeMgr::sendNonbondedCUDASlaveEnqueuePatch(), Compute::sequence(), and ComputeNonbondedCUDA::patch_record::slave.

| int ComputeNonbondedCUDA::noWork | ( | ) | virtual |

Reimplemented from Compute.

Definition at line 1112 of file ComputeNonbondedCUDA.C.

References atomsChanged, ComputeNonbondedCUDA::patch_record::bornRad, ComputeNonbondedCUDA::patch_record::bornRadBox, computeMgr, ComputeNonbondedCUDA::patch_record::dEdaSum, ComputeNonbondedCUDA::patch_record::dEdaSumBox, ComputeNonbondedCUDA::patch_record::dHdrPrefix, ComputeNonbondedCUDA::patch_record::dHdrPrefixBox, Flags::doEnergy, doEnergy, Flags::doFullElectrostatics, Flags::doNonbonded, doSlow, SimParameters::GBISOn, GBISP, Compute::gbisPhase, Patch::getCompAtomExtInfo(), hostedPatches, ComputeNonbondedCUDA::patch_record::intRad, ComputeNonbondedCUDA::patch_record::intRadBox, Flags::lattice, lattice, master, masterPe, numSlaves, Node::Object(), Box< Owner, Data >::open(), ComputeNonbondedCUDA::patch_record::p, ComputeNonbondedCUDA::patch_record::patchID, patchRecords, ComputeNonbondedCUDA::patch_record::positionBox, ComputeNonbondedCUDA::patch_record::psiSum, ComputeNonbondedCUDA::patch_record::psiSumBox, reduction, ComputeMgr::sendNonbondedCUDASlaveReady(), ComputeMgr::sendNonbondedCUDASlaveSkip(), Compute::sequence(), Node::simParameters, simParams, ResizeArray< T >::size(), skip(), slavePes, slaves, Flags::step, step, SubmitReduction::submit(), workStarted, ComputeNonbondedCUDA::patch_record::x, and ComputeNonbondedCUDA::patch_record::xExt.

| void ComputeNonbondedCUDA::recvYieldDevice | ( | int | pe | ) |

Definition at line 1637 of file ComputeNonbondedCUDA.C.

References Lattice::a(), SimParameters::alpha_cutoff, atom_params, atoms, atomsChanged, Lattice::b(), block_order, bornRadH, Lattice::c(), CcdCallBacksReset(), SimParameters::coulomb_radius_offset, cuda_bind_atom_params(), cuda_bind_atoms(), cuda_bind_forces(), cuda_bind_GBIS_bornRad(), cuda_bind_GBIS_dEdaSum(), cuda_bind_GBIS_dHdrPrefix(), cuda_bind_GBIS_energy(), cuda_bind_GBIS_intRad(), cuda_bind_GBIS_psiSum(), cuda_bind_vdw_types(), cuda_bind_virials(), cuda_check_local_calc(), cuda_check_local_progress(), cuda_check_progress(), cuda_check_remote_calc(), cuda_check_remote_progress(), cuda_GBIS_P1(), cuda_GBIS_P2(), cuda_GBIS_P3(), cuda_nonbonded_forces(), CUDA_POLL, SimParameters::cutoff, ComputeNonbondedUtil::cutoff2, dEdaSumH, deviceCUDA, deviceID, dHdrPrefixH, SimParameters::dielectric, doEnergy, doSlow, dummy_dev, dummy_size, end_local_download, end_remote_download, energy_gbis, force_ready_queue, force_ready_queue_next, forces, SimParameters::fsMax, SimParameters::GBISOn, GBISP, Compute::gbisPhase, DeviceCUDA::getMergeGrids(), DeviceCUDA::getNoStreaming(), DeviceCUDA::getSharedGpu(), intRad0H, intRadSH, SimParameters::kappa, kernel_launch_state, lata, latb, latc, lattice, localActivePatches, localComputeRecords, SimParameters::nonbondedScaling, Node::Object(), patchPairsReordered, plcutoff2, SimParameters::PMEOffload, SimParameters::PMEOn, psiSumH, remote_submit_time, remoteActivePatches, remoteComputeRecords, savePairlists, Compute::sequence(), DeviceCUDA::setGpuIsMine(), Node::simParameters, simParams, ResizeArray< T >::size(), slow_forces, SimParameters::solvent_dielectric, start_calc, stream, stream2, SimParameters::switchingActive, SimParameters::switchingDist, usePairlists, SimParameters::usePMECUDA, vdw_types, virials, workStarted, Vector::x, Vector::y, and Vector::z.

Referenced by doWork(), and ComputeMgr::recvYieldDevice().

| void ComputeNonbondedCUDA::registerPatches | ( | ) |

Definition at line 615 of file ComputeNonbondedCUDA.C.

References activePatches, ResizeArray< T >::add(), ResizeArray< T >::begin(), ComputeNonbondedCUDA::patch_record::bornRadBox, ProxyMgr::createProxy(), ComputeNonbondedCUDA::patch_record::dEdaSumBox, ComputeNonbondedCUDA::patch_record::dHdrPrefixBox, ComputeNonbondedCUDA::patch_record::forceBox, SimParameters::GBISOn, hostedPatches, ComputeNonbondedCUDA::patch_record::hostPe, ComputeNonbondedCUDA::patch_record::intRadBox, ComputeNonbondedCUDA::patch_record::isLocal, localHostedPatches, master, masterPe, ComputeNonbondedCUDA::patch_record::msg, Node::Object(), ProxyMgr::Object(), ComputeNonbondedCUDA::patch_record::p, PatchMap::patch(), patchMap, patchRecords, ComputeNonbondedCUDA::patch_record::positionBox, PRIORITY_SIZE, ComputeNonbondedCUDA::patch_record::psiSumBox, Patch::registerBornRadPickup(), Patch::registerDEdaSumDeposit(), Patch::registerDHdrPrefixPickup(), Patch::registerForceDeposit(), Patch::registerIntRadPickup(), Patch::registerPositionPickup(), Patch::registerPsiSumDeposit(), remoteHostedPatches, Compute::setNumPatches(), Node::simParameters, simParams, ResizeArray< T >::size(), and ComputeNonbondedCUDA::patch_record::slave.

Referenced by assignPatches(), and ComputeNonbondedCUDA().

| void ComputeNonbondedCUDA::requirePatch | ( | int | pid | ) |

Definition at line 579 of file ComputeNonbondedCUDA.C.

References activePatches, ResizeArray< T >::add(), ComputeNonbondedCUDA::patch_record::bornRad, computesChanged, ComputeNonbondedCUDA::patch_record::dEdaSum, deviceCUDA, ComputeNonbondedCUDA::patch_record::dHdrPrefix, ComputeNonbondedCUDA::patch_record::f, DeviceCUDA::getMergeGrids(), ComputeNonbondedCUDA::patch_record::hostPe, PatchMap::index_a(), PatchMap::index_b(), PatchMap::index_c(), ComputeNonbondedCUDA::patch_record::intRad, ComputeNonbondedCUDA::patch_record::isLocal, ComputeNonbondedCUDA::patch_record::isSameNode, ComputeNonbondedCUDA::patch_record::isSamePhysicalNode, localActivePatches, PatchMap::node(), ComputeNonbondedCUDA::patch_record::patchID, patchMap, patchRecords, ComputeNonbondedCUDA::patch_record::psiSum, ComputeNonbondedCUDA::patch_record::r, ComputeNonbondedCUDA::patch_record::refCount, remoteActivePatches, ComputeNonbondedCUDA::patch_record::x, and ComputeNonbondedCUDA::patch_record::xExt.

Referenced by register_cuda_compute_pair(), and register_cuda_compute_self().

| void ComputeNonbondedCUDA::skip | ( | ) |

Definition at line 1095 of file ComputeNonbondedCUDA.C.

References ComputeNonbondedCUDA::patch_record::bornRadBox, ComputeNonbondedCUDA::patch_record::dEdaSumBox, ComputeNonbondedCUDA::patch_record::dHdrPrefixBox, ComputeNonbondedCUDA::patch_record::forceBox, SimParameters::GBISOn, hostedPatches, ComputeNonbondedCUDA::patch_record::intRadBox, master, Node::Object(), patchRecords, ComputeNonbondedCUDA::patch_record::positionBox, ComputeNonbondedCUDA::patch_record::psiSumBox, Node::simParameters, simParams, ResizeArray< T >::size(), and Box< Owner, Data >::skip().

Referenced by noWork(), and ComputeMgr::recvNonbondedCUDASlaveSkip().
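`skip()` is referenced by `noWork()`, and its own references consist mostly of the per-patch boxes plus `Box::skip()`. One plausible reading, sketched here with hypothetical `BoxSketch` and `SkipSketch` types, is that every box is skipped rather than opened when a step has nothing to compute. Using a `doNonbonded` flag as the trigger and a nonzero return to mean "no work" are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a data box; counts how often it was skipped.
struct BoxSketch {
    int skipped = 0;
    void skip() { ++skipped; }
};

struct SkipSketch {
    std::vector<BoxSketch> positionBoxes;  // one per hosted patch (assumed)
    std::vector<BoxSketch> forceBoxes;     // one per hosted patch (assumed)

    explicit SkipSketch(std::size_t numPatches)
        : positionBoxes(numPatches), forceBoxes(numPatches) {}

    // Skip every box so that waiting producers and consumers are released.
    void skip() {
        assert(positionBoxes.size() == forceBoxes.size());
        for (BoxSketch& b : positionBoxes) b.skip();
        for (BoxSketch& b : forceBoxes) b.skip();
    }

    // Assumed convention: nonzero means the caller should not schedule doWork().
    int noWork(bool doNonbonded) {
        if (!doNonbonded) {
            skip();
            return 1;
        }
        return 0;
    }
};
```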

Member Data Documentation

| ResizeArray<int> ComputeNonbondedCUDA::activePatches |

Definition at line 100 of file ComputeNonbondedCUDA.h.

Referenced by assignPatches(), doWork(), finishPatch(), messageFinishPatch(), registerPatches(), and requirePatch().

| AtomMap* ComputeNonbondedCUDA::atomMap |

Definition at line 124 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA().

| CudaAtom* ComputeNonbondedCUDA::atoms |

Definition at line 147 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), and recvYieldDevice().

| int ComputeNonbondedCUDA::atoms_size |

Definition at line 146 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), and doWork().

| int ComputeNonbondedCUDA::atomsChanged |

Definition at line 136 of file ComputeNonbondedCUDA.h.

Referenced by atomUpdate(), ComputeNonbondedCUDA(), doWork(), finishWork(), noWork(), and recvYieldDevice().

| ResizeArray<compute_record> ComputeNonbondedCUDA::computeRecords |

Definition at line 103 of file ComputeNonbondedCUDA.h.

Referenced by doWork(), and finishReductions().

| int ComputeNonbondedCUDA::computesChanged |

Definition at line 137 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), and requirePatch().

| GBReal* ComputeNonbondedCUDA::dEdaSumH |

Definition at line 116 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), finishWork(), and recvYieldDevice().

| int ComputeNonbondedCUDA::dEdaSumH_size |

Definition at line 115 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), and doWork().

| int ComputeNonbondedCUDA::deviceID |

Definition at line 121 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), and recvYieldDevice().

| int ComputeNonbondedCUDA::doEnergy |

Definition at line 85 of file ComputeNonbondedCUDA.h.

Referenced by finishReductions(), noWork(), and recvYieldDevice().

| int ComputeNonbondedCUDA::doSlow |

Definition at line 85 of file ComputeNonbondedCUDA.h.

Referenced by doWork(), finishReductions(), finishWork(), noWork(), and recvYieldDevice().

| float4* ComputeNonbondedCUDA::forces |

Definition at line 107 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), and recvYieldDevice().

| int ComputeNonbondedCUDA::forces_size |

Definition at line 106 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), and doWork().

| ResizeArray<int> ComputeNonbondedCUDA::hostedPatches |

Definition at line 101 of file ComputeNonbondedCUDA.h.

Referenced by doWork(), noWork(), registerPatches(), and skip().

| Lattice ComputeNonbondedCUDA::lattice |

Definition at line 84 of file ComputeNonbondedCUDA.h.

Referenced by doWork(), noWork(), and recvYieldDevice().

| ResizeArray<int> ComputeNonbondedCUDA::localActivePatches |

Definition at line 100 of file ComputeNonbondedCUDA.h.

Referenced by doWork(), recvYieldDevice(), and requirePatch().

| ResizeArray<compute_record> ComputeNonbondedCUDA::localComputeRecords |

Definition at line 104 of file ComputeNonbondedCUDA.h.

Referenced by doWork(), finishReductions(), recvYieldDevice(), register_cuda_compute_pair(), and register_cuda_compute_self().

| ResizeArray<int> ComputeNonbondedCUDA::localHostedPatches |

Definition at line 101 of file ComputeNonbondedCUDA.h.

Referenced by finishWork(), registerPatches(), and ComputeMgr::sendNonbondedCUDASlaveEnqueue().

| LocalWorkMsg* ComputeNonbondedCUDA::localWorkMsg2 |

Definition at line 81 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), and ComputeMgr::sendNonbondedCUDASlaveEnqueue().

| ComputeNonbondedCUDA* ComputeNonbondedCUDA::master |

Definition at line 129 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), finishPatch(), finishWork(), noWork(), registerPatches(), and skip().

| int ComputeNonbondedCUDA::masterPe |

Definition at line 130 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), noWork(), and registerPatches().

| int ComputeNonbondedCUDA::numSlaves |

Definition at line 134 of file ComputeNonbondedCUDA.h.

Referenced by assignPatches(), finishWork(), and noWork().

| int ComputeNonbondedCUDA::pairlistsValid |

Definition at line 140 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), and doWork().

| float ComputeNonbondedCUDA::pairlistTolerance |

Definition at line 141 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), and doWork().

| PatchMap* ComputeNonbondedCUDA::patchMap |

Definition at line 123 of file ComputeNonbondedCUDA.h.

Referenced by assignPatches(), ComputeNonbondedCUDA(), doWork(), register_cuda_compute_pair(), registerPatches(), and requirePatch().

| int ComputeNonbondedCUDA::patchPairsReordered |

Definition at line 138 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), finishReductions(), and recvYieldDevice().

| ResizeArray<patch_record> ComputeNonbondedCUDA::patchRecords |

Definition at line 102 of file ComputeNonbondedCUDA.h.

Referenced by assignPatches(), ComputeNonbondedCUDA(), doWork(), finishPatch(), finishReductions(), finishWork(), messageFinishPatch(), noWork(), register_cuda_compute_pair(), register_cuda_compute_self(), registerPatches(), requirePatch(), and skip().

| float ComputeNonbondedCUDA::plcutoff2 |

Definition at line 144 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), and recvYieldDevice().

| GBReal* ComputeNonbondedCUDA::psiSumH |

Definition at line 113 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), finishWork(), and recvYieldDevice().

| int ComputeNonbondedCUDA::psiSumH_size |

Definition at line 112 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), and doWork().

| SubmitReduction* ComputeNonbondedCUDA::reduction |

Definition at line 125 of file ComputeNonbondedCUDA.h.

Referenced by assignPatches(), ComputeNonbondedCUDA(), doWork(), finishReductions(), finishWork(), and noWork().

| ResizeArray<int> ComputeNonbondedCUDA::remoteActivePatches |

Definition at line 100 of file ComputeNonbondedCUDA.h.

Referenced by doWork(), recvYieldDevice(), and requirePatch().

| ResizeArray<compute_record> ComputeNonbondedCUDA::remoteComputeRecords |

Definition at line 104 of file ComputeNonbondedCUDA.h.

Referenced by doWork(), finishReductions(), recvYieldDevice(), register_cuda_compute_pair(), and register_cuda_compute_self().

| ResizeArray<int> ComputeNonbondedCUDA::remoteHostedPatches |

Definition at line 101 of file ComputeNonbondedCUDA.h.

Referenced by finishWork(), and registerPatches().

| int ComputeNonbondedCUDA::savePairlists |

Definition at line 143 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), and recvYieldDevice().

| int ComputeNonbondedCUDA::slaveIndex |

Definition at line 131 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA().

| int* ComputeNonbondedCUDA::slavePes |

Definition at line 133 of file ComputeNonbondedCUDA.h.

Referenced by assignPatches(), ComputeNonbondedCUDA(), finishWork(), and noWork().

| ComputeNonbondedCUDA** ComputeNonbondedCUDA::slaves |

Definition at line 132 of file ComputeNonbondedCUDA.h.

Referenced by assignPatches(), ComputeNonbondedCUDA(), finishWork(), and noWork().

| float4* ComputeNonbondedCUDA::slow_forces |

Definition at line 110 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), and recvYieldDevice().

| int ComputeNonbondedCUDA::slow_forces_size |

Definition at line 109 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), and doWork().

| int ComputeNonbondedCUDA::step |

Definition at line 86 of file ComputeNonbondedCUDA.h.

Referenced by finishReductions(), and noWork().

| int ComputeNonbondedCUDA::usePairlists |

Definition at line 142 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), and recvYieldDevice().

| int ComputeNonbondedCUDA::workStarted |

Definition at line 83 of file ComputeNonbondedCUDA.h.

Referenced by ComputeNonbondedCUDA(), doWork(), finishWork(), noWork(), and recvYieldDevice().

The documentation for this class was generated from ComputeNonbondedCUDA.h and ComputeNonbondedCUDA.C.

Generated by Doxygen 1.8.5